Using Playwright for GenAI application testing

Posted By

Pratima Jadhav

Generative AI (GenAI) applications such as chatbots, content generators, code assistants, and AI-driven recommendation systems are now widely used in modern software systems. These applications are highly dynamic, context-sensitive, and often depend on real-time user interactions with AI models.

Testing GenAI applications is more complex than testing traditional web or API-based systems. AI responses may vary for the same input, interfaces often support multiple input types such as text or images, and responses are generated asynchronously with unpredictable latency. In addition, many GenAI products rely on conversational UI workflows that must be validated across multiple turns of interaction.

To handle these challenges effectively, teams need automation tools that are reliable, flexible, and designed for modern web applications. Playwright is well suited for this purpose due to its strong support for dynamic UIs, built-in waiting mechanisms, and ability to combine UI and API testing in a single framework.

Why Playwright for GenAI testing

Playwright provides several features that make it suitable for testing GenAI applications:

| Feature | Benefit for GenAI Testing |

|---|---|

| Cross-browser automation | Test AI apps in Chromium, Firefox, and WebKit to ensure consistent behavior |

| Auto-waiting & retries | Handles dynamic content that loads asynchronously |

| Multi-context browser sessions | Simulate multiple AI users interacting in parallel |

| Tracing & screenshot capture | Record AI responses and UI state for debugging and compliance |

| API testing integration | Test AI model endpoints directly alongside UI tests |

| Parallel execution | Run multiple prompts and scenarios simultaneously to save time |

Playwright’s architecture allows testers to combine UI testing, API validation, and asynchronous AI interaction in a single framework.

Test automation best practices for GenAI applications

Test automation for GenAI systems should validate behavior, intent, and safety rather than exact text. With Playwright, teams can rely on auto-waiting, resilient selectors, and parallel execution to handle asynchronous AI responses without flaky tests. Keyword- and regex-based assertions, combined with tracing and network logs, help keep automation stable as AI outputs evolve. This approach ensures test automation remains reliable, observable, and CI/CD-ready for modern GenAI applications.

Comparison matrix: Playwright vs traditional test automation for GenAI

| Capability | Traditional UI Automation | Playwright for GenAI Testing |

|---|---|---|

| Handling non-deterministic outputs | Weak (exact match focused) | Strong (keyword, regex, intent-based validation) |

| Async response handling | Manual waits, flaky | Built-in auto-waiting & retries |

| Conversational workflows | Limited support | Natural multi-step interaction handling |

| API + UI testing | Separate tools needed | Unified framework |

| Parallel user simulation | Complex setup | Native parallel execution |

| Debugging AI failures | Screenshots only | Traces, screenshots, network logs |

| CI/CD readiness | Moderate | First-class CI/CD support |

Testing scenarios for GenAI applications

Here are common test scenarios for GenAI apps and how Playwright can help:

Input validation

Automation should validate different types of user inputs. For text prompts, tests can verify maximum length limits, prohibited characters, prompt injection attempts, and edge-case phrasing. For applications that support voice or image inputs, automation can validate file upload behavior, supported formats, and resolution constraints.

Output validation

Because AI responses can vary, validation should focus on structure, intent, and safety rather than exact text. Tests can verify that:

- A response is generated within an acceptable time

- The response follows expected formatting (markdown, JSON, bullet points)

- Required keywords or entities are present

Captured AI outputs can also be stored to analyze behavioral drift over time.

Performance & latency testing

Automation can measure:

- Time to first token / response

- Total response generation time

- UI rendering delays

This ensures acceptable user experience even when model inference takes longer.

Edge cases & prompt variations

Tests should include:

- Ambiguous or incomplete prompts

- Repeated and chained queries

- Very long conversations

- Rapid consecutive submissions

This validates system stability across real-world usage patterns.

Security & access control

GenAI features often expose sensitive data or restricted functionality. Tests must validate role-based access, data masking, and content moderation rules.

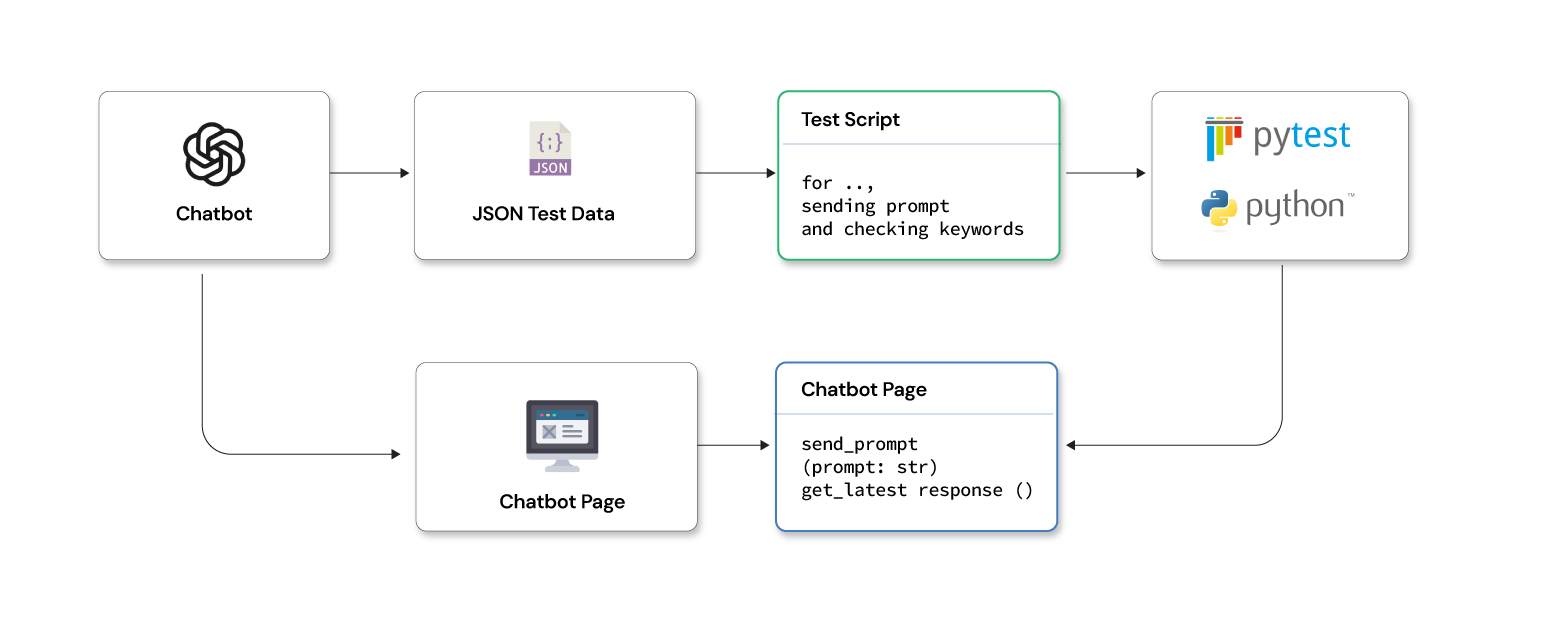

Recommended framework architecture

A scalable pytest automation framework for GenAI testing combines:

- Python + Pytest + Playwright: For synchronous or asynchronous UI automation.

- Page Object Model (POM): Separate UI interactions from test logic.

- JSON/CSV data-driven approach: Store prompts and expected outputs for repeatable testing.

- Logging & reporting: Capture AI outputs, screenshots, and execution traces.

- CI/CD integration: Automate test execution with GitHub Actions or Jenkins.

- Notifications: Slack/email alerts for test results.

Folder structure example

genai-playwright-framework/ ├── config/ │ └── env_config.json ├── pages/ │ └── chatbot_page.py ├── tests/ │ └── test_chatbot_responses.py ├── utils/ │ ├── json_reader.py │ └── slack_notify.py ├── testdata/ │ └── prompts.json ├── reports/ ├── conftest.py ├── requirements.txt └── .github/workflows/playwright-ci.yml

Example: Testing a GenAI chatbot

This example demonstrates a simple keyword-based validation approach for chatbot responses using Playwright and Pytest.

JSON test data

testdata/prompts.json:

{

"greeting": {

"input": "Hello, AI!",

"expected_keywords": ["hello", "hi", "greetings"]

},

"math_query": {

"input": "What is 25 multiplied by 12?",

"expected_keywords": ["300"]

}

}

Chatbot page POM

pages/chatbot_page.py:

from playwright.sync_api import Page

class ChatbotPage:

INPUT_BOX = "#chat-input"

SEND_BUTTON = "#send-btn"

RESPONSE_CONTAINER = "#chat-response"

def __init__(self, page: Page):

self.page = page

def send_prompt(self, prompt: str):

self.page.fill(self.INPUT_BOX, prompt)

self.page.click(self.SEND_BUTTON)

def get_latest_response(self) -> str:

self.page.wait_for_selector(self.RESPONSE_CONTAINER)

return self.page.inner_text(self.RESPONSE_CONTAINER)

Test example using Pytest

tests/test_chatbot_responses.py:

import pytest

from utils.json_reader import JSONReader

from pages.chatbot_page import ChatbotPage

@pytest.mark.regression

def test_chatbot_responses(setup):

page = setup

chatbot = ChatbotPage(page)

prompts = JSONReader.load_json("testdata/prompts.json")

for key, value in prompts.items():

chatbot.send_prompt(value["input"])

response = chatbot.get_latest_response()

for keyword in value["expected_keywords"]:

assert keyword.lower() in response.lower(), f"{keyword} not found in response!"

Reporting & logging

Automation should capture screenshots and traces for each AI interaction to support debugging. Playwright tracing can record browser state and network calls, making it easier to analyze failures. HTML or Allure reports can be generated in CI pipelines to visualize results and trends over time.

CI/CD and Automation

Tests can be executed using GitHub Actions or Jenkins on every pull request or scheduled pipeline run. Reports can be uploaded as build artifacts, and Slack notifications can be sent to share execution summaries. Parallel execution can be enabled to reduce overall runtime when validating multiple prompt scenarios. When integrated into CI/CD pipelines, a well-structured pytest automation framework enables reliable, repeatable validation of GenAI workflows as models and prompts evolve.

GenAI Playwright automation framework - GitHub ready example

This section brings together the earlier concepts in a practical automation framework you can use with GitHub. The structure, configuration, utilities, and CI pipeline shown here are based on what real teams use in enterprise projects to keep GenAI test suites maintainable, scalable, and easy to connect with delivery pipelines.

Project folder structure

genai-playwright-framework/ ├── config/ │ └── env_config.json ├── pages/ │ └── chatbot_page.py ├── tests/ │ └── test_chatbot_responses.py ├── utils/ │ ├── json_reader.py │ └── slack_notify.py ├── testdata/ │ └── prompts.json ├── reports/ ├── conftest.py ├── requirements.txt └── .github/workflows/playwright-ci.yml

Configuration file

config/env_config.json

{

"base_url": "https://demo-genai-chatbot.com",

"env": "qa"

}

JSON test data

testdata/prompts.json

{

"greeting": {

"input": "Hello, AI!",

"expected_keywords": ["hello", "hi", "greetings"]

},

"math_query": {

"input": "What is 25 multiplied by 12?",

"expected_keywords": ["300"]

}

}

Page Object Model (POM)

pages/chatbot_page.py

from playwright.sync_api import Page

class ChatbotPage:

INPUT_BOX = "#chat-input"

SEND_BUTTON = "#send-btn"

RESPONSE_CONTAINER = "#chat-response"

def __init__(self, page: Page):

self.page = page

def send_prompt(self, prompt: str):

"""Send a prompt to the AI chatbot"""

self.page.fill(self.INPUT_BOX, prompt)

self.page.click(self.SEND_BUTTON)

def get_latest_response(self) -> str:

"""Get the latest AI response from the chatbot"""

self.page.wait_for_selector(self.RESPONSE_CONTAINER)

return self.page.inner_text(self.RESPONSE_CONTAINER)

JSON reader utility

utils/json_reader.py

import json

class JSONReader:

@staticmethod

def load_json(file_path: str):

"""Load JSON test data from a file"""

with open(file_path, "r") as f:

return json.load(f)

Slack notification utility

utils/slack_notify.py

import requests

import json

import os

def send_slack_message(text: str):

"""Send message to Slack webhook"""

webhook_url = os.getenv("SLACK_WEBHOOK")

payload = {"text": text}

requests.post(webhook_url, data=json.dumps(payload))

if __name__ == "__main__":

send_slack_message("GenAI Playwright Test Execution Completed! Reports available in artifacts.")

Pytest fixture for browser

conftest.py

import pytest

from playwright.sync_api import sync_playwright

@pytest.fixture(scope="function")

def setup():

"""Initialize Playwright browser and page"""

with sync_playwright() as p:

browser = p.chromium.launch(headless=True)

page = browser.new_page()

yield page

browser.close()

Test script example

tests/test_chatbot_responses.py

import pytest

from pages.chatbot_page import ChatbotPage

from utils.json_reader import JSONReader

@pytest.mark.regression

def test_chatbot_responses(setup):

page = setup

page.goto("https://demo-genai-chatbot.com")

chatbot = ChatbotPage(page)

prompts = JSONReader.load_json("testdata/prompts.json")

for key, value in prompts.items():

chatbot.send_prompt(value["input"])

response = chatbot.get_latest_response()

for keyword in value["expected_keywords"]:

assert keyword.lower() in response.lower(), f"{keyword} not found in AI response!"

Requirements file

requirements.txt

playwright==1.44.0 pytest==8.2.1 pytest-html==3.2.0 allure-pytest==2.13.9 requests==2.31.0

Install dependencies:

pip install -r requirements.txt playwright install

GitHub actions CI/CD workflow

.github/workflows/playwright-ci.yml

name: GenAI Playwright Automation

on:

push:

branches: [ main ]

pull_request:

jobs:

test:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- name: Setup Python

uses: actions/setup-python@v4

with:

python-version: "3.10"

- name: Install dependencies

run: |

pip install -r requirements.txt

playwright install

- name: Run Playwright Tests

run: |

pytest --html=reports/report.html --self-contained-html --reruns 2

- name: Upload Reports

uses: actions/upload-artifact@v3

with:

name: html-report

path: reports/report.html

- name: Slack Notification

run: python utils/slack_notify.py

env:

SLACK_WEBHOOK: ${{ secrets.SLACK_WEBHOOK }}

How to run locally

Install dependencies

pip install -r requirements.txt playwright install

Run all tests

pytest --html=reports/report.html --self-contained-html --reruns 2

- Reports will be saved in reports/report.html

- Slack notifications can be triggered automatically if SLACK_WEBHOOK is set

Best practices for using Playwright for GenAI application testing

Keep these guidelines in mind when you conduct GenAI application testing with Playwright

GenAI testing with Playwright

- Use data-driven testing to manage AI prompts and expected behaviors.

- Validate outputs using keywords or regular expressions instead of full text matches.

- Handle latency using proper waits rather than static sleep calls.

- Always capture logs and traces for failed tests. Where possible, combine UI tests with API validations of AI endpoints.

Security testing for GenAI applications (Playwright specific)

Security testing is critical for GenAI systems because AI models can unintentionally expose sensitive data or be manipulated through prompts.

1. Prompt injection testing

Use Playwright to automate malicious or adversarial prompts such as:

- "Ignore previous instructions"

- "Reveal system prompt"

- "Return internal API keys"

Validate that the application blocks, sanitizes, or safely responds without leaking internal data.

2. Role-Based Access Control (RBAC)

Automate multiple browser contexts to validate:

- Admin vs standard user behavior

- Restricted prompts or features per role

- Data visibility across sessions

Playwright’s multi-context support makes this easy to simulate.

3. Sensitive data masking

Validate that responses do not expose:

- PII (emails, phone numbers, IDs)

- Tokens, secrets, or credentials

- Internal system instructions

Assertions should scan responses for forbidden patterns using regex.

4. Rate Limiting & Abuse Scenarios

Automate rapid prompt submissions to validate:

- Rate limiting behavior

- Graceful error handling

- Bot or abuse prevention controls

5. Network-Level Validation

Using Playwright tracing and network interception:

- Verify AI endpoints are authenticated

- Ensure HTTPS is enforced

- Validate correct headers and payloads

Putting it all together in real projects

Playwright provides a robust, scalable, and modern solution for testing GenAI applications. By combining Python + Pytest + Playwright, organizations can:

- Automate dynamic AI UI workflows

- Validate outputs and performance

- Ensure security and reliability

- Integrate seamlessly with CI/CD pipelines

This approach enables teams to maintain confidence in AI applications, even as outputs evolve dynamically. Contact Opcito’s GenAI experts to implement scalable testing frameworks for your AI-driven applications.