Uncensored AI is not the threat; enterprise exposure is

Posted by Abhijit Kharat

There’s a lot of talk about uncensored AI models being a security threat to businesses, and I get why people are concerned. But I think we’re looking at this the wrong way. The real problem isn’t the models themselves. It’s that companies are accelerating enterprise AI adoption without fully understanding or managing the risks it entails.

AI is everywhere now. Developers use it to write code faster, teams use it to automate decisions, and integrations happen almost automatically with fewer people checking the work. This is not just AI in cybersecurity anymore; it is AI inside everyday business workflows. When all of this happens without anyone really keeping track or setting guardrails, you end up with a massive blind spot that your existing security tools simply weren’t built to handle.

Unmanaged AI exposure is the real enterprise security risk

Today, enterprise security risk is less about what AI models can do and more about how widely and quietly AI is used across systems and workflows. This is one of the real risks of enterprise AI adoption. If organizations do not know where AI is generating code, making decisions, or integrating into pipelines, they cannot properly assess or control the risks.

This is also why debates that pit enterprise AI risk against uncensored AI often miss the point. Exposure grows not because tools are powerful, but because they are unmanaged. So, what is enterprise AI exposure? It is the gap between where AI is used and where security teams actually have visibility. Here are three trends that show what enterprise AI exposure looks like in practice and what security teams must address.

AI is lowering the skill barrier for attackers

Cyberattacks once required deep technical expertise, specialized tools, and significant preparation. AI compresses all three. With modern language models and even a basic uncensored AI tool, attackers can now generate phishing campaigns, reconnaissance scripts, exploit variations, and social engineering content through rapid iteration.

AI does not create malicious intent, but it removes friction. When experimentation becomes fast and cheap, attackers test more approaches and adapt more quickly. That leads to higher attack volume and greater variability, making detection harder and increasing pressure on defensive systems and analysts. These are real-world enterprise AI security risks, not theoretical ones.

For enterprises, this means threat activity scales faster than traditional security workflows can adapt, adding to existing enterprise LLM security challenges.

AI-generated vulnerabilities are entering codebases

AI-assisted coding is accelerating software delivery but also introducing subtle risks. Models generate code that appears correct and often passes functional testing, yet may contain insecure logic, unsafe defaults, or outdated dependency patterns. These are increasingly visible as enterprise AI adoption risks inside production systems.

Because AI-generated code blends seamlessly into human-written code, it is difficult to identify its origin during reviews. Over time, organizations lose visibility into how certain design choices were made and what assumptions they carry. The same lack of traceability is one of the ways enterprises expose data through AI tools without realizing it.

The risk extends beyond code generation. Enterprises are increasingly adopting open-source models, plugins, and services, often while teams experiment with uncensored AI capabilities for testing or automation. These components can introduce hidden vulnerabilities into the software supply chain, especially when security validation does not keep pace with adoption.

In many environments, AI artifacts enter production pipelines faster than security teams can assess their impact. This is also how enterprises lose data through LLMs: misconfigured access, logging, and integrations go live before anyone has reviewed them.

AppSec and SecOps teams face growing operational pressure

As development velocity increases, security teams absorb the consequences. More releases, more integrations, and more automated workflows expand the attack surface while reducing clarity around ownership and intent. This is a major factor in why enterprise AI adoption is risky from an operational standpoint.

Security tools may detect issues, but understanding how and why they were introduced becomes harder. Was a vulnerability created by a developer, a model-generated snippet, or a third-party AI integration? Without that context, remediation becomes reactive rather than preventive.
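One way to preserve that context is to record origin at commit time and attach it to findings later. The sketch below is illustrative only: it assumes a hypothetical `AI-Origin:` commit trailer that a team would have to agree on and enforce, and the `Finding` type and its fields are invented for this example rather than taken from any scanner.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    HUMAN = "human"
    AI_ASSISTED = "ai-assisted"
    THIRD_PARTY_AI = "third-party-ai"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class Finding:
    # Hypothetical shape: a scanner rule plus the message of the commit
    # that introduced the flagged code.
    rule_id: str
    commit_message: str


def classify_origin(commit_message: str) -> Origin:
    """Read the assumed 'AI-Origin:' trailer; fall back to UNKNOWN."""
    for line in reversed(commit_message.strip().splitlines()):
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "ai-origin":
            try:
                return Origin(value.strip().lower())
            except ValueError:
                # Trailer present but value not recognized.
                return Origin.UNKNOWN
    return Origin.UNKNOWN


def group_by_origin(findings: list[Finding]) -> dict[Origin, list[str]]:
    """Bucket finding rule IDs by where the flagged code came from."""
    grouped: dict[Origin, list[str]] = {}
    for finding in findings:
        origin = classify_origin(finding.commit_message)
        grouped.setdefault(origin, []).append(finding.rule_id)
    return grouped
```

A large `UNKNOWN` bucket is itself a signal: it marks changes whose origin nobody recorded, which is where remediation tends to stay reactive.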

AppSec and SecOps teams are not struggling due to a lack of skill. They are operating in environments where AI-driven change is outpacing governance, monitoring, and policy enforcement.

This mismatch creates a risk that cannot be solved by individual tools or isolated controls.

Why uncensored models are not the core threat

Restricting access to certain models may reduce some risks, but it does not address the broader exposure problem. Even highly controlled tools can introduce vulnerabilities if organizations lack visibility into how AI is used across teams and systems.

That is why uncensored AI is not the real risk. Shadow AI usage, unsanctioned integrations, and unmanaged code generation create risk regardless of model restrictions. The fundamental issue is not what AI can do, but what enterprises fail to monitor, validate, and govern.

Building enterprise AI governance into development and security pipelines

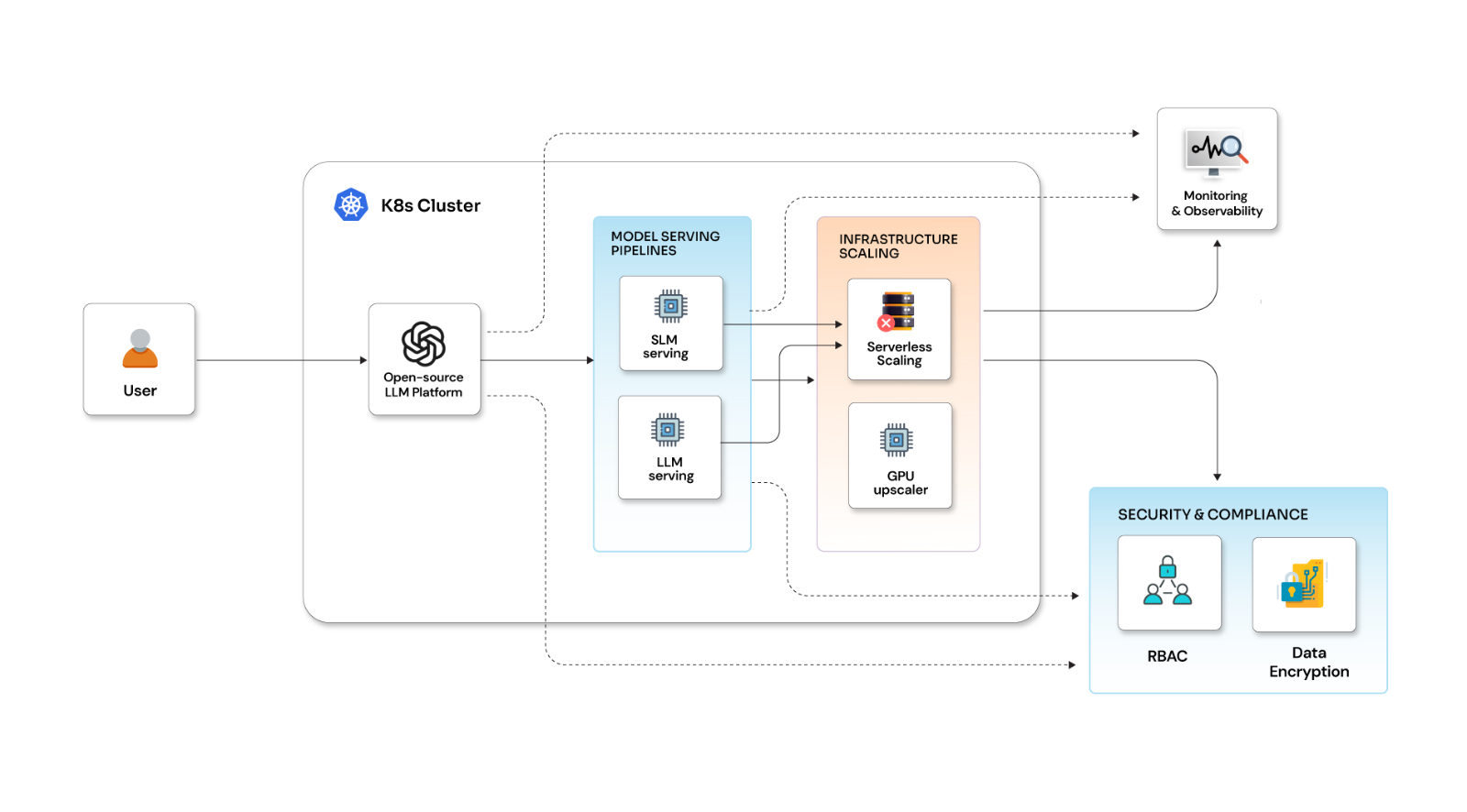

To manage AI-related risk, enterprises need more than policies and training. They need continuous visibility into how AI-generated artifacts enter development and production environments, and how they affect the security posture over time. This is where enterprise AI governance becomes operational, not theoretical.

This requires platforms and processes that treat AI output as part of the software and infrastructure supply chain, subject to the same scrutiny as code, dependencies, and configurations. It also requires shared responsibility across engineering, platform, and security teams.
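To make the idea of exposure concrete, it can be treated as a simple inventory comparison: the AI touchpoints observed in the environment minus the ones security actually monitors. The following is a minimal sketch under that assumption. The touchpoint names are made up, and in practice both inventories would come from whatever discovery and monitoring tooling an organization already runs.

```python
def exposure_gap(observed: set[str], monitored: set[str]) -> set[str]:
    """AI touchpoints that are in use but outside security visibility."""
    return observed - monitored


def coverage(observed: set[str], monitored: set[str]) -> float:
    """Fraction of observed AI touchpoints that are monitored."""
    if not observed:
        return 1.0  # nothing in use, so nothing is unmonitored
    return len(observed & monitored) / len(observed)


# Hypothetical inventories, for illustration only.
observed_usage = {"ide/assistant", "ci/codegen-bot", "support/chat-plugin"}
monitored_usage = {"ide/assistant"}

unmanaged = sorted(exposure_gap(observed_usage, monitored_usage))
print(unmanaged)
```

Tracking this gap release over release gives engineering, platform, and security teams one shared number to work from instead of separate, partial views.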

Organizations that can connect AI usage, application behavior, and security controls can reduce risk without slowing innovation. Those that cannot will continue to discover problems only after they reach production.

Why unmanaged AI exposure will define the next wave of enterprise breaches

AI is transforming how enterprises build and operate systems. That transformation is not optional, and it is not slowing down. The real security risk is not uncensored models. It is adopting AI faster than enterprises can manage the exposure it creates. This is the core reason enterprise AI poses a security risk today.

The most dangerous AI in the enterprise is not the one without filters. It is the one no one is tracking.

Opcito supports enterprises in identifying unmanaged AI exposure, strengthening AppSec and SecOps workflows, and making sure security keeps pace as engineering teams scale. Speak with Opcito’s specialists to evaluate and manage your AI-driven risk.