LangChain vs LlamaIndex: Which one should you use and when

Posted by Kedar Dhoble

When I first started building LLM-powered apps, two libraries kept coming up: LangChain and LlamaIndex. At first, they seemed to do the same thing. But the deeper I dug, the more I realized that they solve very different problems, and understanding that difference is the key to building better AI apps. In this blog, I'll break down what each library does, walk through real-world use cases, and help you decide which one to use when, with some code examples to make it all click.

- LlamaIndex is excellent when you want to connect LLMs to your own data (like PDFs, Notion, databases, etc.).

- LangChain is ideal for building chains, doing multi-step reasoning, and orchestrating agent-style workflows.

- You can, and often should, use both together.

What is LangChain?

LangChain is a framework for orchestrating the logic around large language models. It lets you:

- Build multi-step chains (e.g., extract → calculate → summarize)

- Use tools like search engines, APIs, and calculators

- Manage memory, prompt templates, and agents

It's the go-to choice when your LLM needs to "do things," not just answer a one-off question.
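To make "doing things" concrete, here is a minimal, library-free sketch of the loop an agent runs: the model picks a tool, the program executes it, and the result is fed back as an observation until the model produces a final answer. This is not LangChain's agent API. `fake_llm`, `run_agent`, the `TOOLS` registry, and the `Action: tool | input` text format are all hypothetical, and the stubbed model stands in for a real LLM so the code runs offline.

```python
import ast
import operator

# Hypothetical tools; real LangChain tools also carry a name and a description.
def calculator(expression: str) -> str:
    """Safely evaluate simple arithmetic such as '12 * (3 + 4)'."""
    ops = {ast.Add: operator.add, ast.Sub: operator.sub,
           ast.Mult: operator.mul, ast.Div: operator.truediv}

    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in ops:
            return ops[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")

    return str(ev(ast.parse(expression, mode="eval").body))


def word_count(text: str) -> str:
    return str(len(text.split()))


TOOLS = {"calculator": calculator, "word_count": word_count}


def fake_llm(prompt: str) -> str:
    """Stub model: requests one calculator call, then answers with its result."""
    if "Observation:" not in prompt:
        return "Action: calculator | 12 * (3 + 4)"
    observation = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Final Answer: {observation}"


def run_agent(question: str, llm=fake_llm, max_steps: int = 5) -> str:
    prompt = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(prompt)
        if reply.startswith("Final Answer:"):
            return reply.removeprefix("Final Answer:").strip()
        # Parse "Action: <tool> | <input>", run the tool, append the observation
        tool_name, tool_input = reply.removeprefix("Action:").split("|", 1)
        result = TOOLS[tool_name.strip()](tool_input.strip())
        prompt += f"\n{reply}\nObservation: {result}"
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 12 times the sum of 3 and 4?"))  # → 84
```

The `max_steps` cap matters in practice: a real model can keep requesting tools, and a bounded loop is the simplest guard against that.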

For a deeper dive into how LangChain can help structure clean, object-based responses from LLMs, check out this guide on using LangChain for structured outputs.

When should you use LangChain?

Use LangChain when:

- You need chained logic — e.g., extract → transform → analyze

- You're building agent-style apps (e.g., AI that uses tools or browses the web)

- You want more control over prompt engineering, memory, or dynamic flows
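Memory and prompt templates are easier to reason about once you see how little they are at their core: memory decides which past turns survive, and a template decides where they land in the prompt. The sketch below is a plain-Python illustration with hypothetical names (`BufferMemory`, `build_prompt`), loosely modeled on the idea behind LangChain's windowed buffer memory rather than its actual classes.

```python
from collections import deque
from string import Template


class BufferMemory:
    """Hypothetical helper: keeps only the last `max_turns` exchanges."""

    def __init__(self, max_turns: int = 2):
        self.turns = deque(maxlen=max_turns)  # oldest turns fall off automatically

    def save(self, user: str, ai: str) -> None:
        self.turns.append((user, ai))

    def render(self) -> str:
        return "\n".join(f"Human: {u}\nAI: {a}" for u, a in self.turns)


# The template controls where history and the new question appear in the prompt
PROMPT = Template("You are a travel assistant.\n$history\nHuman: $question\nAI:")


def build_prompt(memory: BufferMemory, question: str) -> str:
    return PROMPT.substitute(history=memory.render(), question=question)


memory = BufferMemory(max_turns=2)
memory.save("Hi, I'm planning a trip.", "Great! Where are you headed?")
memory.save("Goa, in December.", "Noted: Goa in December.")
memory.save("What should I pack?", "Light clothes and sunscreen are a safe bet.")
print(build_prompt(memory, "Any beach suggestions?"))
```

With `max_turns=2`, the opening "planning a trip" exchange is dropped. That trade-off (shorter prompts versus forgotten context) is exactly the kind of control this bullet refers to.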

Example: Multi-step reasoning with LangChain

# LLMChain and SequentialChain are legacy classes (deprecated in newer LangChain releases)
from langchain.chains import LLMChain, SequentialChain
from langchain.prompts import PromptTemplate
from langchain_openai import OpenAI  # pip install langchain-openai; reads OPENAI_API_KEY

llm = OpenAI()

# Step 1: summarize the location; the result is stored under "summary"
location_prompt = PromptTemplate.from_template("Give a short travel summary of {location}")
llm_chain1 = LLMChain(llm=llm, prompt=location_prompt, output_key="summary")

# Step 2: feed that summary into a second prompt
activity_prompt = PromptTemplate.from_template("Based on this: {summary}, suggest one fun activity.")
llm_chain2 = LLMChain(llm=llm, prompt=activity_prompt, output_key="activity")

overall_chain = SequentialChain(
    chains=[llm_chain1, llm_chain2],
    input_variables=["location"],
    output_variables=["summary", "activity"],
)

result = overall_chain.invoke({"location": "Goa"})
print(result["activity"])

Takeaway: LangChain is perfect when your app needs to think in steps or use tools.
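Under the hood, a sequential chain roughly keeps one dictionary of variables: each step formats its prompt from that dictionary and writes its result back under its `output_key`, which is how `{summary}` becomes available to the second prompt. The stdlib sketch below mimics that flow with a stubbed LLM (`fake_llm` and `run_sequential` are hypothetical names), so you can trace the data without an API key.

```python
from collections.abc import Callable

Step = tuple[str, str]  # (prompt template, output key)


def fake_llm(prompt: str) -> str:
    # Stub that echoes the prompt so the data flow is visible
    return f"<answer to: {prompt}>"


def run_sequential(steps: list[Step], inputs: dict[str, str],
                   llm: Callable[[str], str] = fake_llm) -> dict[str, str]:
    state = dict(inputs)                       # shared variables across steps
    for template, output_key in steps:
        prompt = template.format(**state)      # fills {location}, {summary}, ...
        state[output_key] = llm(prompt)        # later steps can now use this key
    return state


steps = [
    ("Give a short travel summary of {location}", "summary"),
    ("Based on this: {summary}, suggest one fun activity.", "activity"),
]
result = run_sequential(steps, {"location": "Goa"})
print(result["activity"])
```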

What is LlamaIndex?

LlamaIndex, previously known as GPT Index, connects large language models to your data. It handles tasks like:

- Chunking and indexing your documents (PDFs, Markdown, SQL, etc.)

- Retrieving relevant data using semantic search

- Providing that information to the LLM for improved answers
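Chunking is the first of those steps, and the chunk size and overlap you choose directly affect what retrieval can find later. Below is a simplified sketch that splits on words with a fixed overlap. LlamaIndex's own node parsers (such as `SentenceSplitter`) count tokens and respect sentence boundaries, so treat `chunk_text` as a hypothetical illustration of the idea, not its algorithm.

```python
def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last window reached the end
            break
    return chunks


text = " ".join(f"w{i}" for i in range(20))
for chunk in chunk_text(text, chunk_size=8, overlap=2):
    print(chunk)
```

The overlap keeps a sentence that straddles a boundary from being cut in half in every chunk, at the cost of storing a little duplicate text.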

If LangChain represents the brain's wiring, then LlamaIndex serves as its long-term memory.

To streamline your LLM deployment and management, check out Opcito's MLOps services for end-to-end machine learning operations.

When should you use LlamaIndex?

Use LlamaIndex when:

- You have custom or private data (like PDFs, database rows, support articles)

- You want retrieval-augmented generation (RAG) — fetching relevant context before asking the model

- You care about how data is chunked, indexed, and retrieved
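RAG boils down to two moves: rank chunks by similarity to the question, then paste the best ones into the prompt. The sketch below swaps real embeddings for bag-of-words cosine similarity so it runs with the standard library alone; `retrieve`, `build_rag_prompt`, and the sample policy sentences are made up for illustration.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Bag-of-words counts; a real pipeline would call an embedding model here
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    q = vectorize(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, top_k))
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n\nQuestion: {query}")


chunks = [  # hypothetical sample data
    "Employees get 20 days of paid leave per year.",
    "The office cafeteria opens at 8 am.",
    "Unused leave can be carried over to the next year.",
]
print(build_rag_prompt("How many days of leave do I get?", chunks))
```

The irrelevant cafeteria chunk never reaches the model, which is the point of retrieving before generating.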

Example: Query a PDF with LlamaIndex

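from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.llms.openai import OpenAI  # pip install llama-index-llms-openai; reads OPENAI_API_KEY

# Load every supported file in the folder (PDFs included) as documents
documents = SimpleDirectoryReader("company_docs").load_data()

# Chunk, embed (OpenAI embeddings by default), and index the documents
index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine(llm=OpenAI(model="gpt-3.5-turbo"))
response = query_engine.query("What is the leave policy?")
print(response)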

Takeaway: LlamaIndex shines when you want your LLM to "read" your data before answering.

Can you use LangChain and LlamaIndex together?

Absolutely. Many production apps use LangChain and LlamaIndex together: LlamaIndex fetches the relevant information, and LangChain handles the logic, reasoning, or tool use on top of it.

Example: LlamaIndex + LangChain

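from langchain.chains import RetrievalQA  # legacy chain class (deprecated in newer LangChain releases)
from langchain_community.retrievers import LlamaIndexRetriever  # the adapter ships with LangChain
from langchain_openai import OpenAI
from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

# LlamaIndex side: load and index the support articles
documents = SimpleDirectoryReader("support_articles").load_data()
index = VectorStoreIndex.from_documents(documents)

# Expose the LlamaIndex index to LangChain as a retriever
retriever = LlamaIndexRetriever(index=index)

# LangChain side: run a question-answering chain over the retrieved context
qa_chain = RetrievalQA.from_chain_type(
    llm=OpenAI(),
    retriever=retriever,
    return_source_documents=True,
)

# With return_source_documents=True the chain has two outputs ("result" and
# "source_documents"), so .run() would raise; use .invoke() and pick the key
result = qa_chain.invoke({"query": "How do I update my billing address?"})
print(result["result"])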

Takeaway: The best of both worlds — LlamaIndex provides the facts, while LangChain manages the process.
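The glue in the combined example is an adapter: the index returns scored chunks, and the orchestration side wants documents with text plus metadata that it can carry through later steps and return as sources. The sketch below shows that hand-off in plain Python. `IndexNode`, `ChainDocument`, `to_documents`, and `answer_with_sources` are hypothetical stand-ins; only the `result`/`source_documents` keys mirror what `RetrievalQA` returns with `return_source_documents=True`.

```python
from dataclasses import dataclass, field


@dataclass
class IndexNode:  # hypothetical stand-in for a retrieved LlamaIndex node
    text: str
    score: float
    source: str


@dataclass
class ChainDocument:  # hypothetical stand-in for a LangChain Document
    page_content: str
    metadata: dict = field(default_factory=dict)


def to_documents(nodes: list[IndexNode]) -> list[ChainDocument]:
    """Adapter: retrieval results in, orchestration-friendly documents out."""
    return [ChainDocument(n.text, {"source": n.source, "score": n.score}) for n in nodes]


def answer_with_sources(question: str, docs: list[ChainDocument], llm) -> dict:
    context = "\n".join(d.page_content for d in docs)
    answer = llm(f"Context:\n{context}\n\nQuestion: {question}")
    return {"result": answer, "source_documents": docs}


def fake_llm(prompt: str) -> str:
    # Stub model so the example runs offline
    return "Open Settings > Billing and edit the address."


nodes = [IndexNode("Go to Settings > Billing to edit your address.", 0.91, "billing.md")]
out = answer_with_sources("How do I update my billing address?", to_documents(nodes), fake_llm)
print(out["result"], [d.metadata["source"] for d in out["source_documents"]])
```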

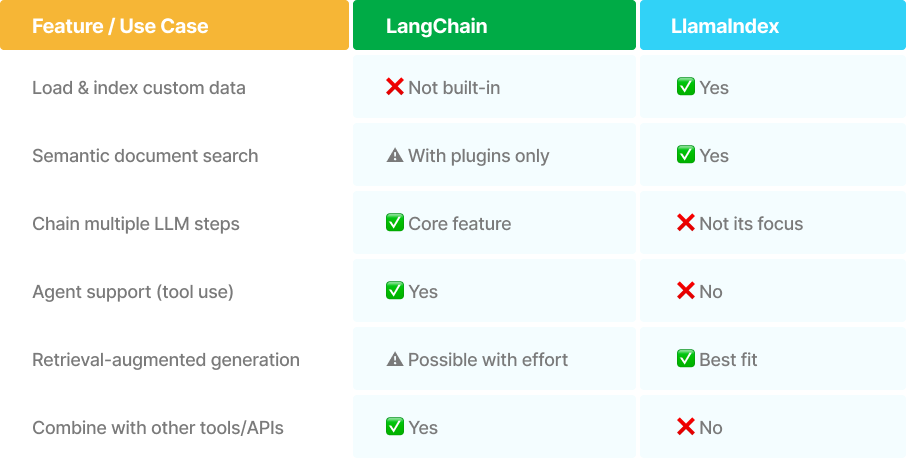

Comparing LlamaIndex vs LangChain

How to choose between LangChain and LlamaIndex?

Here’s how I personally decide:

- Just need your LLM to communicate with your data? → LlamaIndex

- Need reasoning, tools, and multi-step logic? → LangChain

- Want both intelligence + context? → Combine them

Don’t overthink it. These tools solve different problems, and they’re better together.

Final thoughts

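Both LangChain and LlamaIndex are evolving fast. The community is growing, the documentation is getting better, and the integrations between the two keep improving. The flip side is that APIs shift between releases (import paths move and older chain classes get deprecated), so pin your versions and check the docs for the release you use.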

My advice? Experiment. Try both. Combine them.

Real-world LLM apps rarely fit into one neat box and neither should your tech stack. For expert guidance on building cutting-edge LLM-powered applications, explore Opcito's GenAI App Development services.

Have you built something cool with LangChain or LlamaIndex? Found an edge case where one outperformed the other? I’d love to learn how you’re using them. Need support with using LangChain and LlamaIndex? Contact Opcito’s experts to discuss a solution that best fits your business.
