KubeVirt – An Initial Look

Posted by Colwin Fernandes

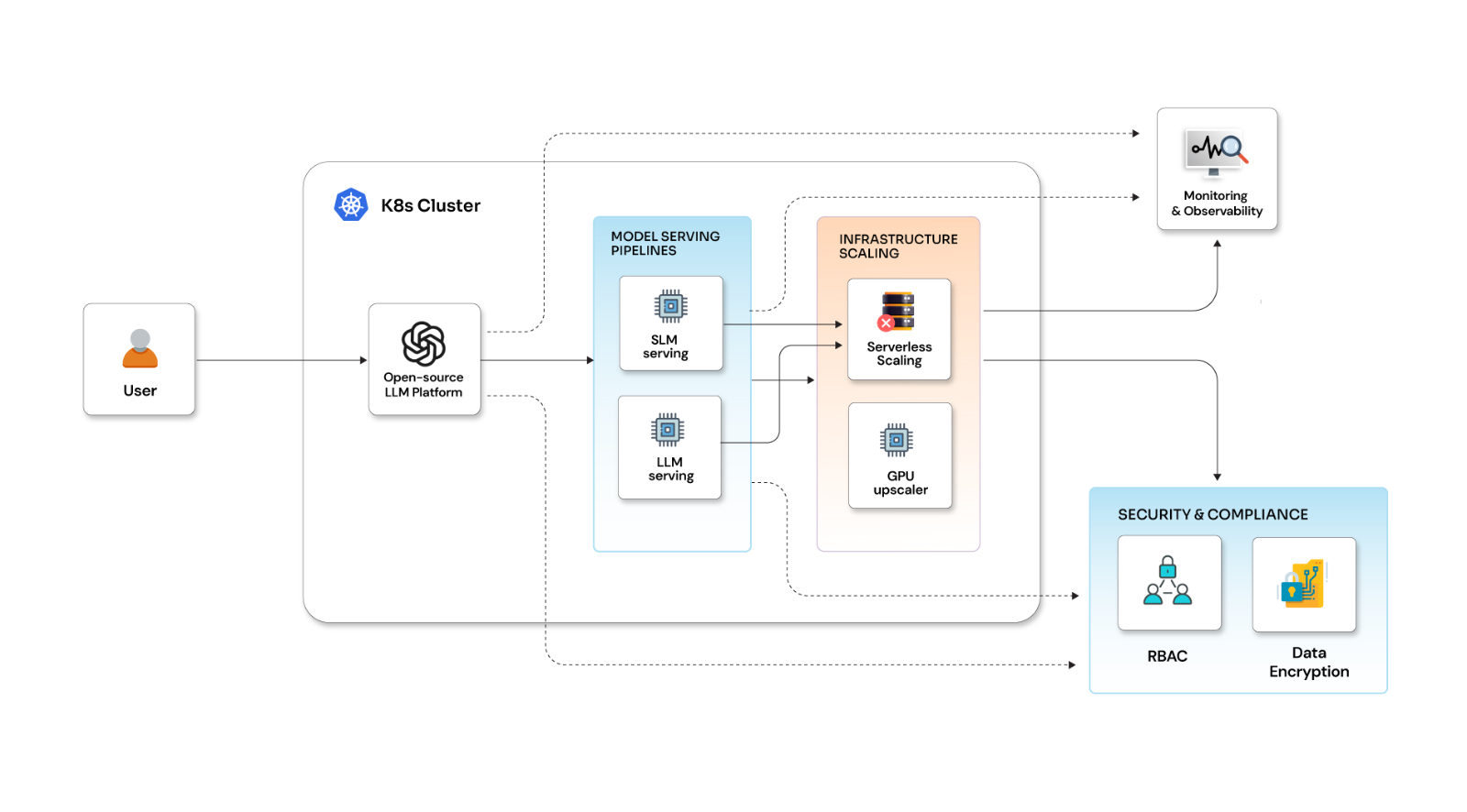

Kubernetes is a great solution for running container workloads: its options and flexibility make it easy to run applications in containers. That works well for applications that containerize easily. But what about applications that don’t (legacy applications, enterprise software), when you’d still like to take advantage of the features Kubernetes provides?

These workloads can usually run as VMs, so wouldn’t it be great to schedule VM-based workloads with Kubernetes? You can, with the KubeVirt add-on. KubeVirt aims to be a virtual machine management add-on for Kubernetes, letting administrators run VMs alongside containers in their Kubernetes clusters. It uses Custom Resource Definitions to extend the Kubernetes API with resource types for virtual machines and sets of virtual machines.

KubeVirt runs each VM inside a regular Kubernetes Pod, so VMs have access to the same facilities as any other Pod, such as storage and networking, and can be controlled and administered with standard Kubernetes tools like kubectl.

Custom Resource Definitions (CRDs)

Before diving into the KubeVirt architecture, let’s quickly touch on what CRDs are.

The Kubernetes API defines resources (endpoints) and the actions that can be performed on them. Custom Resource Definitions (CRDs) are a feature that lets users extend the Kubernetes API with additional custom resources, each with its own name and schema. Once a CRD is applied to your cluster’s API server, Kubernetes handles serving and storing the newly defined resources.
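To make this concrete, here is a minimal sketch that builds a CRD manifest as a Python dict and computes the REST path the API server would serve for an instance of it. The group `example.com` and kind `Widget` are hypothetical names used only for illustration; the schema simply accepts any fields, whereas a real CRD would declare a full OpenAPI schema. The last call shows the same path pattern for a KubeVirt VM, assuming the `kubevirt.io/v1` API version and a hypothetical VM named `testvm`.

```python
import json


def make_crd(group: str, kind: str, plural: str, version: str = "v1") -> dict:
    """Build a minimal apiextensions.k8s.io/v1 CustomResourceDefinition."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's own name must be "<plural>.<group>".
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"kind": kind, "plural": plural, "singular": kind.lower()},
            "versions": [
                {
                    "name": version,
                    "served": True,   # exposed through the API server
                    "storage": True,  # the version persisted in etcd
                    # Accept any fields; a real CRD would declare a full schema.
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "x-kubernetes-preserve-unknown-fields": True,
                        }
                    },
                }
            ],
        },
    }


def resource_path(group: str, version: str, plural: str, namespace: str, name: str = "") -> str:
    """REST path the API server uses for a namespaced custom resource."""
    path = f"/apis/{group}/{version}/namespaces/{namespace}/{plural}"
    return f"{path}/{name}" if name else path


if __name__ == "__main__":
    # Hypothetical CRD, printed as JSON (kubectl accepts JSON manifests too).
    print(json.dumps(make_crd("example.com", "Widget", "widgets"), indent=2))
    # Same URL pattern for a KubeVirt VirtualMachine named "testvm".
    print(resource_path("kubevirt.io", "v1", "virtualmachines", "default", "testvm"))
```

Once such a CRD is registered, tools like kubectl can list and edit these objects exactly as they do built-in resources, which is what lets KubeVirt’s VM objects be managed with standard tooling.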

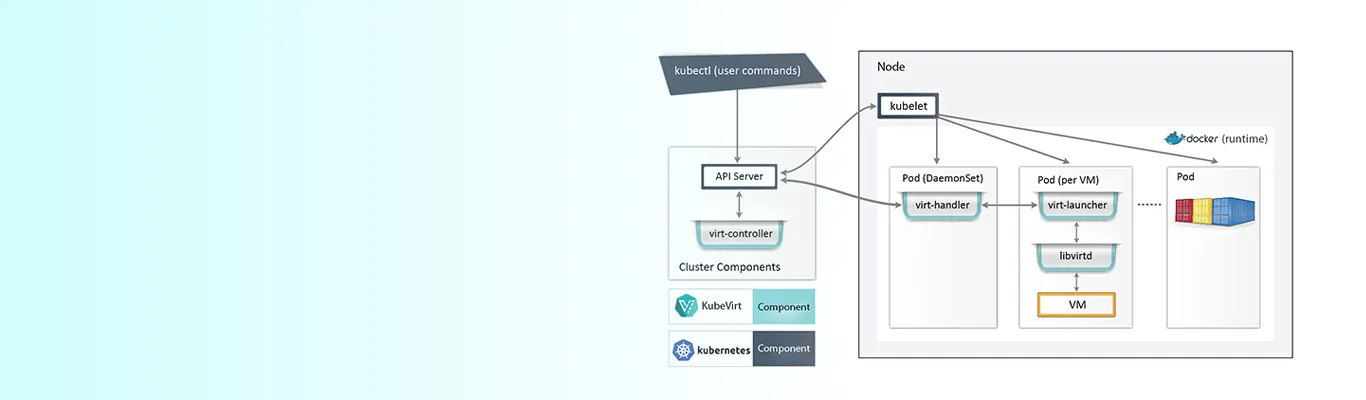

Now let’s have a look at the high-level architecture of the KubeVirt add-on and the CRDs that extend the functionality of the base Kubernetes API to enable these features.

KubeVirt Components

The primary CRD for KubeVirt is the VirtualMachine (VM) resource. It defines all the properties of the VM, such as CPU, RAM, and the number and type of NICs available to it.
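As an illustration, the sketch below builds a minimal VirtualMachine manifest with CPU cores, memory, and a single NIC whose emulated model can be chosen. It assumes the current `kubevirt.io/v1` API (older KubeVirt releases used different API versions and field names), uses a hypothetical VM name, and boots from the `quay.io/kubevirt/cirros-container-disk-demo` image used in KubeVirt’s own demos. Only one NIC on the pod network is shown; additional NICs need additional network definitions, which are out of scope here.

```python
import json


def make_vm(name: str, cores: int, memory: str, nic_model: str = "virtio") -> dict:
    """Build a minimal kubevirt.io/v1 VirtualMachine manifest (illustrative sketch)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # Create the VM stopped; it can be started later (e.g. with `virtctl start`).
            "runStrategy": "Halted",
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            # The disk refers to the volume below by name.
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}],
                            # One NIC on the pod network; `model` selects the emulated NIC type.
                            "interfaces": [
                                {"name": "default", "model": nic_model, "masquerade": {}}
                            ],
                        },
                    },
                    "networks": [{"name": "default", "pod": {}}],
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "containerDisk": {
                                "image": "quay.io/kubevirt/cirros-container-disk-demo"
                            },
                        }
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_vm("testvm", cores=2, memory="1Gi"), indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`. Generating the manifest from code is just a convenience here; the same structure is usually written directly as YAML.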


virt-controller

The virt-controller is a custom Kubernetes Operator that handles the virtualization functionality within the cluster. When a new VM object is created, the virt-controller creates the pod in which the VM will run. Once that pod is scheduled to a node, the virt-controller updates the VM object with the node’s name and hands control over to the virt-handler, a node-level KubeVirt component that runs on every node in the cluster.
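The controller’s behaviour follows the usual Kubernetes reconcile pattern: observe the current state, compare it with the desired state, and act on the difference. The toy model below sketches one reconcile pass under that assumption. The `VMObject` and `Pod` classes, the pod naming scheme, and the in-memory lists are all hypothetical simplifications; the real virt-controller is written in Go, talks to the API server, and handles many more cases.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VMObject:
    name: str
    node_name: Optional[str] = None  # recorded once the VM's pod lands on a node


@dataclass
class Pod:
    name: str
    vm_name: str
    node_name: Optional[str] = None  # set by the (simulated) scheduler


def reconcile(vms: List[VMObject], pods: List[Pod]) -> List[str]:
    """One pass of a toy virt-controller loop.

    Creates a pod for any VM that lacks one, and records the node name on VMs
    whose pod has been scheduled. Returns the VMs handed off to the node agent.
    """
    pods_by_vm = {p.vm_name: p for p in pods}
    handed_off = []
    for vm in vms:
        pod = pods_by_vm.get(vm.name)
        if pod is None:
            # No pod yet: create one for the VM to run in (name is illustrative).
            pods.append(Pod(name=f"virt-launcher-{vm.name}", vm_name=vm.name))
        elif pod.node_name is not None and vm.node_name is None:
            # Pod scheduled: record the node and hand off to the node-level agent.
            vm.node_name = pod.node_name
            handed_off.append(vm.name)
    return handed_off


if __name__ == "__main__":
    vms, pods = [VMObject("testvm")], []
    reconcile(vms, pods)           # creates the pod
    pods[0].node_name = "node-1"   # pretend the scheduler placed it
    print(reconcile(vms, pods))    # the VM is now handed off
```

Because each pass only acts on the difference between observed and desired state, running it repeatedly is harmless, which is why controllers can be driven by periodic resyncs as well as by events.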

virt-handler

The virt-handler takes over from the virt-controller once the VM’s pod has been scheduled to a node in the Kubernetes cluster. It then performs whatever operations are needed to bring the VM to its desired state: it reads the VM spec and creates the corresponding domain through the libvirtd instance in the VM’s pod, and it deletes the domain when the pod is deleted from the node it is running on.
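A “domain” is libvirt’s description of a virtual machine, expressed as XML. The sketch below translates a few VM properties (name, CPU count, memory, disk files) into a minimal libvirt domain document, to show the kind of spec-to-domain conversion described above. It is a simplified illustration, not KubeVirt’s converter: the function name and disk paths are hypothetical, every disk is assumed to be a qcow2 file on a virtio bus, and a real domain definition carries many more devices and settings.

```python
import string
import xml.etree.ElementTree as ET
from typing import List


def vm_spec_to_domain_xml(name: str, cpus: int, memory_mib: int, disk_paths: List[str]) -> str:
    """Render a minimal libvirt domain XML document for a KVM guest."""
    if len(disk_paths) > len(string.ascii_lowercase):
        raise ValueError("this sketch only names disks vda..vdz")
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mib)
    ET.SubElement(domain, "vcpu").text = str(cpus)
    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type").text = "hvm"  # fully virtualized guest
    devices = ET.SubElement(domain, "devices")
    for i, path in enumerate(disk_paths):
        disk = ET.SubElement(devices, "disk", type="file", device="disk")
        ET.SubElement(disk, "driver", name="qemu", type="qcow2")
        ET.SubElement(disk, "source", file=path)
        # Guest-visible device names: vda, vdb, ...
        ET.SubElement(disk, "target", dev="vd" + string.ascii_lowercase[i], bus="virtio")
    return ET.tostring(domain, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical disk path, for illustration only.
    print(vm_spec_to_domain_xml("testvm", 2, 1024, ["/var/lib/example/root.qcow2"]))
```

A document like this is what a libvirt client hands to libvirtd to define and start a guest.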

virt-launcher

KubeVirt creates one pod for every VM object. The primary container in that pod runs virt-launcher, which provides the cgroups and namespaces the VM process needs. The virt-handler passes the VM’s specification (the custom resource object) to virt-launcher, which then uses a local libvirtd instance in its container to start the actual VM. From then on, virt-launcher monitors the VM process and exits once the VM has exited. In some situations the Kubernetes runtime may try to shut down the virt-launcher pod before the VM process has exited. When this happens, virt-launcher forwards the termination signal to the VM process and tries to hold off the pod’s termination until the VM has shut down gracefully.
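The graceful-shutdown behaviour is a common supervisor pattern: catch the termination signal, pass it on to the child, and wait (up to a grace period) for the child to exit before exiting yourself. The sketch below shows that pattern with Python’s `subprocess` and `signal` modules on a POSIX system. It is an illustration of the technique, not virt-launcher’s code; a `sleep` process stands in for the VM, and the `grace` value is an assumed parameter.

```python
import signal
import subprocess


def forward_and_wait(child: subprocess.Popen, sig: int = signal.SIGTERM, grace: float = 30.0) -> int:
    """Forward `sig` to the child and block until it exits.

    If the child is still running after `grace` seconds, kill it as a last
    resort. Returns the child's return code (a negative N means "terminated
    by signal N" on POSIX).
    """
    if child.poll() is None:
        child.send_signal(sig)
    try:
        return child.wait(timeout=grace)
    except subprocess.TimeoutExpired:
        child.kill()
        return child.wait()


def install_forwarder(child: subprocess.Popen, grace: float = 30.0) -> None:
    """On SIGTERM, pass the signal on and exit only after the child is gone."""

    def on_sigterm(signum, frame):
        forward_and_wait(child, signum, grace)
        raise SystemExit(0)

    signal.signal(signal.SIGTERM, on_sigterm)


if __name__ == "__main__":
    # Hypothetical stand-in for the VM process.
    vm_process = subprocess.Popen(["sleep", "300"])
    install_forwarder(vm_process, grace=10.0)
    vm_process.wait()  # exit normally if the "VM" stops on its own
```

Sending `kill -TERM` to this script’s PID makes it terminate the child first and only then exit, mirroring how the pod’s shutdown is held off until the VM process is gone.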

libvirtd

An instance of libvirtd is deployed with each VM pod. The virt-launcher manages the lifecycle of the VM process via libvirtd.

Now that we have an idea of the moving pieces in the add-on, let’s look at how two important aspects of running non-trivial workloads in Kubernetes, storage and networking, work with KubeVirt. KubeVirt VMs can be configured with disks that are backed by Kubernetes volumes.

Storage

PersistentVolumeClaim volumes make Kubernetes persistent volumes available as disks to VMs deployed with KubeVirt. At the time of writing, these persistent volumes must be iSCSI block devices, although work is under way to support file-based persistent volume disks.

Ephemeral volumes are also supported. They are generated dynamically, associated with the VM when it starts, and discarded when it stops. Currently they are backed by PersistentVolumeClaim volumes: the claim serves as a read-only backing store, and the VM’s writes go to a temporary local copy-on-write image.
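In a VM manifest, each disk is declared twice: once as a device under `domain.devices.disks` and once as a volume under `volumes`, linked by a shared name. The sketch below builds that pair for a PVC-backed disk, optionally wrapping the claim in an `ephemeral` volume so writes are discarded when the VM stops. It assumes the `kubevirt.io/v1` field names; the claim names and helper functions are hypothetical.

```python
from typing import Tuple


def pvc_disk(name: str, claim_name: str, ephemeral: bool = False) -> Tuple[dict, dict]:
    """Return a (disk, volume) pair for a PVC-backed VM disk."""
    disk = {"name": name, "disk": {"bus": "virtio"}}
    pvc_source = {"persistentVolumeClaim": {"claimName": claim_name}}
    if ephemeral:
        # The claim is only a read-only backing store; writes are thrown away.
        volume = {"name": name, "ephemeral": pvc_source}
    else:
        # Writes go straight to the persistent volume.
        volume = {"name": name, **pvc_source}
    return disk, volume


def attach(template_spec: dict, disk: dict, volume: dict) -> None:
    """Add a disk/volume pair to a VM template's spec, creating lists as needed."""
    devices = template_spec.setdefault("domain", {}).setdefault("devices", {})
    devices.setdefault("disks", []).append(disk)
    template_spec.setdefault("volumes", []).append(volume)


if __name__ == "__main__":
    spec: dict = {}
    attach(spec, *pvc_disk("datadisk", "my-data-pvc"))                   # persistent
    attach(spec, *pvc_disk("scratch", "golden-image-pvc", ephemeral=True))  # discarded on stop
    print(spec)
```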

Registry Disk volumes are also supported. These volumes reference a container image, pulled from a container registry, that embeds a qcow or raw disk image. As with ephemeral volumes, data written to these disks persists only for the life of the container.
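In current KubeVirt releases this volume type is called `containerDisk` (earlier releases used `registryDisk`). The sketch below builds a container-disk pair and adds a small check that every declared disk has a volume of the same name, since a disk entry on its own points at nothing. The image is the demo image used in KubeVirt’s examples; the helper names are hypothetical.

```python
from typing import List, Tuple


def container_disk(name: str, image: str) -> Tuple[dict, dict]:
    """Return a (disk, volume) pair for a disk shipped inside a container image."""
    disk = {"name": name, "disk": {"bus": "virtio"}}
    volume = {"name": name, "containerDisk": {"image": image}}
    return disk, volume


def unmatched_disks(template_spec: dict) -> List[str]:
    """Names of disks that have no volume with the same name."""
    volume_names = {v["name"] for v in template_spec.get("volumes", [])}
    disks = template_spec.get("domain", {}).get("devices", {}).get("disks", [])
    return [d["name"] for d in disks if d["name"] not in volume_names]


if __name__ == "__main__":
    disk, volume = container_disk("rootdisk", "quay.io/kubevirt/cirros-container-disk-demo")
    spec = {"domain": {"devices": {"disks": [disk]}}, "volumes": [volume]}
    print(unmatched_disks(spec))  # an empty list means every disk is backed
```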

CloudInit NoCloud volumes give a VM a user-data source, attached as a disk, that supplies configuration details to guests with cloud-init installed. The user data can be provided as clear text, base64-encoded, or through a Kubernetes Secret.
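The three ways of supplying user data map to three alternative fields of a `cloudInitNoCloud` volume. The sketch below builds the volume in each form, assuming the `kubevirt.io/v1` field names `userData`, `userDataBase64`, and `secretRef`; the volume name, Secret name, and cloud-config contents are hypothetical. A matching disk entry with the same name is still needed for the guest to see the data.

```python
import base64
from typing import Optional


def cloud_init_volume(user_data: str = "", secret_name: Optional[str] = None,
                      encode: bool = False) -> dict:
    """Build a cloudInitNoCloud volume from clear text, base64, or a Secret."""
    if secret_name is not None:
        # User data is read from an existing Kubernetes Secret.
        source = {"secretRef": {"name": secret_name}}
    elif encode:
        source = {"userDataBase64": base64.b64encode(user_data.encode("utf-8")).decode("ascii")}
    else:
        source = {"userData": user_data}
    return {"name": "cloudinitdisk", "cloudInitNoCloud": source}


if __name__ == "__main__":
    user_data = "#cloud-config\nhostname: testvm\n"
    print(cloud_init_volume(user_data))
    print(cloud_init_volume(user_data, encode=True))
    print(cloud_init_volume(secret_name="testvm-userdata"))
```

Base64 is useful when the user data contains characters that are awkward to embed in YAML; a Secret keeps sensitive values such as passwords out of the VM manifest itself.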

Networking

Because KubeVirt runs VMs in standard Kubernetes Pods, all basic pod networking functionality is available to each KubeVirt VM out of the box. Specific TCP and UDP ports can be exposed to consumers outside the cluster through regular Kubernetes Services, with no special networking considerations or configuration needed.
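Since the VM lives in a pod, exposing a port is a matter of writing an ordinary Service whose selector matches that pod’s labels. The sketch below assumes the VM’s template sets the label `kubevirt.io/domain: <vm-name>` (as KubeVirt’s own examples do), so the virt-launcher pod carries it; the Service names and ports are hypothetical. KubeVirt’s `virtctl expose` command can also generate a similar Service.

```python
import json


def vm_service(name: str, vm_name: str, port: int, target_port: int,
               protocol: str = "TCP", service_type: str = "NodePort") -> dict:
    """Build a Service that forwards `port` to `target_port` inside the VM."""
    if protocol not in ("TCP", "UDP"):
        raise ValueError("this sketch only handles TCP and UDP")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # NodePort makes the port reachable from outside the cluster.
            "type": service_type,
            # Matches the label assumed to be set in the VM's pod template.
            "selector": {"kubevirt.io/domain": vm_name},
            "ports": [{"port": port, "targetPort": target_port, "protocol": protocol}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(vm_service("testvm-ssh", "testvm", port=22, target_port=22), indent=2))
    print(json.dumps(vm_service("testvm-dns", "testvm", 53, 53, protocol="UDP"), indent=2))
```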

So, what are you waiting for? Go check out KubeVirt yourself! The user guide at https://kubevirt.gitbooks.io/user-guide/ is a great place to start.
