Introduction to Persistent Volumes and mounting GCP Buckets on Containers

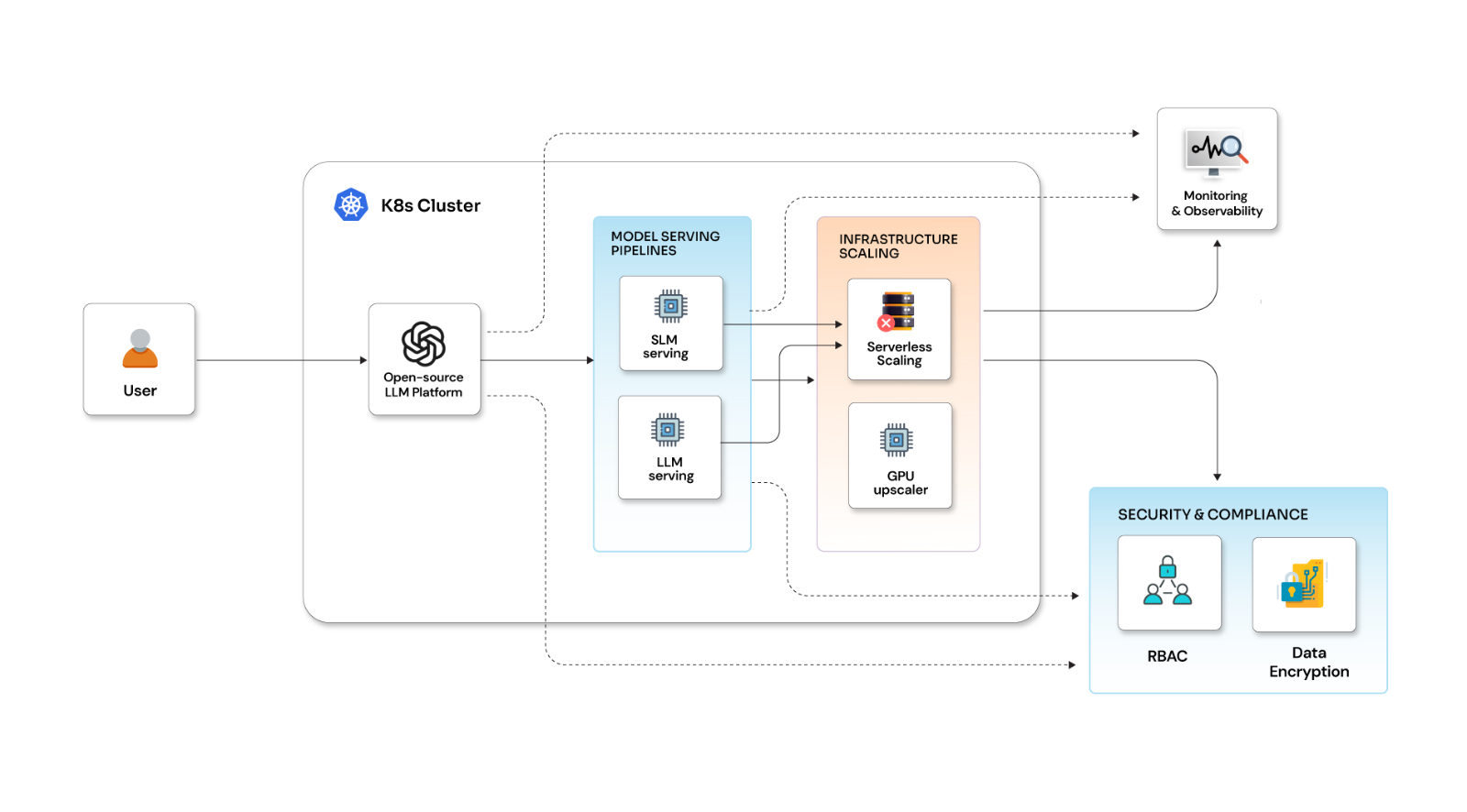

Posted by Chetan Kolhe

The global application container market has been growing steadily and is expected to exceed $8 billion by 2025. Almost all major cloud providers are competing for this market with feature-rich services. Google Cloud Platform (GCP) is one of them, offering services for everything from development to production: a container builder, a container registry, a container-optimized OS, and Google Kubernetes Engine (GKE) for orchestration. GKE provides a managed environment, running on Google infrastructure, for deploying, managing, and scaling containerized applications. GKE runs workloads on Kubernetes clusters, and when you run services on Kubernetes, you should preserve the data those services need to recover from a failure, as well as any important data that may be needed later. Kubernetes stores such data in persistent volumes.

So, what is a Persistent Volume (PV)?

A persistent volume (PV) is a cluster-wide storage resource whose data outlives any individual pod. A PV is usually not backed by storage attached locally to a worker node but by a networked storage system, such as Amazon EBS, NFS, or a distributed file system like Ceph (on GKE, Compute Engine persistent disks). Because PVs have a lifecycle independent of pods, the data stored in them continues to exist even if pods are deleted or recreated. A Persistent Volume Claim (PVC) is a request for storage: when you create one, the platform either binds it to an existing PV or provisions a new PV for it. Pods attach PVs through PVCs by listing a PVC as one of their volumes; the cluster looks up the PV bound to that claim and mounts it into the pod.
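To make the PV/PVC relationship concrete, here is a minimal sketch written as a bash function that prints Kubernetes YAML: a claim, and a pod that uses the claim as a volume. It assumes the cluster has a default StorageClass that can provision the PV dynamically; the names `data-claim` and `app`, the `/data` path, the `busybox` image and the 10Gi size are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a PersistentVolumeClaim and a Pod that mounts it.
# All names, the image and the size are hypothetical placeholders.
# No storageClassName is set, so the cluster's default StorageClass is
# assumed to provision the backing PersistentVolume.
pvc_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
    - name: app
      image: busybox
      command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
      volumeMounts:
        - name: data          # refers to the volume declared below
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: data-claim # the pod uses the PVC, not the PV directly
EOF
}
```

Usage: `pvc_pod_manifest | kubectl apply -f -`. If you delete the pod and recreate it, the files under `/data` are still there, because the claim and its bound PV live independently of the pod.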

Persistent Volumes support the following access modes:

- ReadWriteOnce: Volume can be mounted as read-write by a single node.

- ReadOnlyMany: Volume can be mounted as read-only by many nodes.

- ReadWriteMany: Volume can be mounted as read-write by multiple nodes.
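When you inspect volumes with `kubectl get pv` or `kubectl get pvc`, the ACCESS MODES column shows these modes in abbreviated form. The small helper below is a sketch (the function name is hypothetical) that maps each mode listed above to the abbreviation kubectl prints.

```shell
#!/usr/bin/env bash
# Sketch: map an access-mode name to the abbreviation kubectl shows
# in the ACCESS MODES column. Returns non-zero for unknown names.
access_mode_abbrev() {
  case "$1" in
    ReadWriteOnce) echo "RWO" ;;
    ReadOnlyMany)  echo "ROX" ;;
    ReadWriteMany) echo "RWX" ;;
    *) echo "unknown access mode: $1" >&2; return 1 ;;
  esac
}
```

For example, a claim listed with `RWX` is one that requested ReadWriteMany.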

What are the challenges with Persistent Volumes on GKE for simultaneous read-write access from multiple nodes?

A typical business application may have complex data pipelines that continuously process raw data into business outputs. To process this data in parallel, the setup involves multiple pods, each of which downloads raw files from blob storage (that is, a bucket) and processes them. Because these pods need continuous access to the raw data and are CPU-intensive, they end up scheduled on different nodes, so the natural choice is the ReadWriteMany access mode. However, PVs on GKE are by default backed by Compute Engine persistent disks, which do not support ReadWriteMany: a persistent disk can be mounted read-write by only a single node (ReadWriteOnce) or read-only by many nodes (ReadOnlyMany).
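One way to see this limitation is to request ReadWriteMany from the default StorageClass. The sketch below prints such a claim; the name `shared-raw-data` and the 50Gi size are hypothetical, and the expectation that the claim stays Pending assumes GKE's default class backed by Compute Engine persistent disks used as a filesystem volume.

```shell
#!/usr/bin/env bash
# Sketch: a claim asking the default StorageClass for ReadWriteMany.
# With the default persistent-disk-backed class on GKE, this claim is
# expected to stay Pending instead of binding. Name and size are hypothetical.
rwx_claim_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-raw-data
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 50Gi
EOF
}
```

Usage: `rwx_claim_manifest | kubectl apply -f -`, then `kubectl describe pvc shared-raw-data`; the Events section should explain why the claim is not bound.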

So, how do you solve this problem?

The usual answers are Google Filestore or a Network File System (NFS) server.

Google Filestore: Filestore is designed for large-scale file system workloads, and the minimum size of an instance is 1 TB. If you have a smaller amount of data, I would recommend against it, because you would be paying for far more capacity than you use.

Network File System (NFS) Server: NFS works well for sharing data across multiple pods running on different nodes. Its drawbacks are that its read/write operations are slow, and the NFS server itself takes about 1 CPU core to run, which is a lot if your cluster is small.
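For reference, this is roughly what sharing an NFS export with ReadWriteMany looks like: a statically created PV that points at the server, plus a claim bound to it. It is a sketch; the server address `10.0.0.2`, the export path `/exports`, the object names and the 100Gi size are hypothetical. Because a Filestore instance also serves an NFS share, the same shape applies to it.

```shell
#!/usr/bin/env bash
# Sketch: a static NFS-backed PV and a claim that binds to it with
# ReadWriteMany. Server, path, names and sizes are hypothetical.
nfs_pv_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 10.0.0.2
    path: /exports
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""   # do not let the default class provision a disk
  volumeName: nfs-pv     # bind explicitly to the PV above
  resources:
    requests:
      storage: 100Gi
EOF
}
```

Setting `storageClassName: ""` keeps the default StorageClass from provisioning a new persistent disk, and `volumeName` pins the claim to this PV, so every pod that uses `nfs-claim` shares the same export.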

So, if you are short on budget and CPU cores, how do you solve these problems? A good solution is to mount the GCP bucket on the containers. Mounting the bucket at the same location in every running pod gives all of them shared read-write access to the same data, which is what ReadWriteMany was needed for. It also does not need a separate server with its own CPU and memory, and in my experience read/write operations are faster than with the NFS setup. Before getting to the actual steps, let's look at what GCP buckets are and how Cloud Storage FUSE helps solve these problems.

GCP Buckets: Buckets are the basic containers that hold your data. Everything stored in Cloud Storage is contained in a bucket. You can use buckets to organize data according to your requirements and to control access to it.
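As a sketch of working with buckets from the command line, the function below creates a bucket and copies a raw input file into a `raw/` prefix with `gcloud storage`. It assumes the Google Cloud CLI is installed and authenticated; the function names, bucket name, location, file name and `raw/` prefix are hypothetical. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN is set.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Sketch: create a bucket and upload one raw file into it.
# Arguments: bucket name, location, local file (all hypothetical examples).
create_bucket_and_upload() {
  local bucket="$1" location="$2" file="$3"
  run gcloud storage buckets create "gs://${bucket}" --location="${location}"
  run gcloud storage cp "${file}" "gs://${bucket}/raw/"
}
```

For example, `DRY_RUN=1 create_bucket_and_upload my-bucket us-central1 input.csv` prints:

```shell
+ gcloud storage buckets create gs://my-bucket --location=us-central1
+ gcloud storage cp input.csv gs://my-bucket/raw/
```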

Cloud Storage FUSE: Cloud Storage FUSE (gcsfuse) is an open source FUSE adapter that lets you mount Cloud Storage buckets as file systems on your machines. Applications can then upload and download Cloud Storage objects through ordinary file operations. You can run Cloud Storage FUSE anywhere that has connectivity to Cloud Storage.

To install gcsfuse on a Debian-based system, run the following commands as root (or prefix them with `sudo`):

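```shell
# Add the gcsfuse apt repository for this distribution release
# (requires lsb_release, from the lsb-release package)
export GCSFUSE_REPO=gcsfuse-$(lsb_release -c -s)
echo "deb [signed-by=/usr/share/keyrings/cloud.google.asc] https://packages.cloud.google.com/apt $GCSFUSE_REPO main" | tee /etc/apt/sources.list.d/gcsfuse.list

# Import Google Cloud's package signing key into the keyring referenced above
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | tee /usr/share/keyrings/cloud.google.asc

# Install gcsfuse
apt-get update && apt-get -y install gcsfuse
```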
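Since gcsfuse has to be present in the container image for the in-pod mount described later in this post, the installation can be baked into an image. The following is a minimal sketch of a function that writes such a Dockerfile: it adds Google's gcsfuse apt repository and signing key and installs the package. The function name, the `debian:bookworm-slim` base image, the keyring path and the placeholder `CMD` are assumptions, and it assumes Google publishes a gcsfuse repository for the release named in `VERSION_CODENAME`.

```shell
#!/usr/bin/env bash
# Sketch: write a Dockerfile that installs gcsfuse into a Debian image.
# Argument: output directory (defaults to the current directory).
write_gcsfuse_dockerfile() {
  local dir="${1:-.}"
  mkdir -p "$dir"
  # Quoted 'EOF' keeps ${VERSION_CODENAME} literal; it is expanded at build time.
  cat > "$dir/Dockerfile" <<'EOF'
FROM debian:bookworm-slim
RUN apt-get update \
 && apt-get install -y --no-install-recommends ca-certificates curl \
 && . /etc/os-release \
 && echo "deb [signed-by=/usr/share/keyrings/cloud.google.asc] https://packages.cloud.google.com/apt gcsfuse-${VERSION_CODENAME} main" \
      > /etc/apt/sources.list.d/gcsfuse.list \
 && curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg \
      -o /usr/share/keyrings/cloud.google.asc \
 && apt-get update \
 && apt-get install -y --no-install-recommends gcsfuse \
 && rm -rf /var/lib/apt/lists/*
# Placeholder command; replace with your application's entrypoint.
CMD ["sleep", "infinity"]
EOF
}
```

Usage: `write_gcsfuse_dockerfile build && docker build -t my-app-with-gcsfuse build` (the image tag is hypothetical).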

Now for the actual solution: mounting a GCP bucket. The steps are simple:

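```shell
# Create the directory that will serve as the mount point
mkdir /path/to/mount/point
# Mount the bucket named my-bucket at that directory
gcsfuse my-bucket /path/to/mount/point
```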

If you want to unmount the GCP bucket later, run:

```shell
fusermount -u /path/to/mount/point
```
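If you script these steps, it helps to make them safe to re-run. The sketch below wraps the mkdir, gcsfuse and fusermount commands from this section in idempotent helpers; the function names are hypothetical, the mount check reads `/proc/mounts` (so it assumes Linux and an absolute path without a trailing slash), and `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN is set.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# True if the given absolute path is currently a mount point (Linux only).
is_mounted() {
  grep -qsF " $1 " /proc/mounts
}

# Mount a bucket at a directory, skipping if something is already mounted there.
mount_bucket() {
  local bucket="$1" dir="$2"
  if is_mounted "$dir"; then
    echo "$dir is already mounted" >&2
    return 0
  fi
  run mkdir -p "$dir"
  run gcsfuse "$bucket" "$dir"
}

# Unmount a directory only if it is currently mounted.
unmount_bucket() {
  local dir="$1"
  if is_mounted "$dir"; then
    run fusermount -u "$dir"
  else
    echo "$dir is not mounted" >&2
  fi
}
```

Usage: `mount_bucket my-bucket /mnt/raw-data` before processing, and `unmount_bucket /mnt/raw-data` when done.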

How to Mount a GCP Bucket Inside Containers?

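The bucket should be mounted before the main application needs it and unmounted before the container is destroyed. To achieve this, use the postStart and preStop lifecycle hooks. Keep in mind that Kubernetes does not guarantee that a postStart hook finishes before the container's entrypoint starts, so the application should tolerate the mount appearing shortly after startup. Mounting a FUSE file system from inside a container also requires running the container in privileged mode. The Deployment file therefore has to contain both the security settings and the configuration that mounts the Google Cloud Storage bucket at a given path.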

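Since gcsfuse needs access to /dev/fuse, also give the container the SYS_ADMIN capability. (Privileged mode already grants all capabilities, so listing SYS_ADMIN explicitly mainly documents why the container needs elevated rights.)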

```yaml
securityContext:
  privileged: true
  capabilities:
    add:
      - SYS_ADMIN
```
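To confirm from inside a running container that the securityContext above and the image provide what gcsfuse needs, you could run a small preflight check. This is a sketch; the function name is hypothetical, and it only checks for the `/dev/fuse` device and the `gcsfuse` and `fusermount` binaries used in this post.

```shell
#!/usr/bin/env bash
# Sketch: report missing FUSE prerequisites; returns non-zero if any are absent.
check_fuse_prereqs() {
  local missing=0 cmd
  if [[ ! -e /dev/fuse ]]; then
    echo "missing: /dev/fuse (is the container privileged?)"
    missing=1
  fi
  for cmd in gcsfuse fusermount; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd (is gcsfuse installed in the image?)"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: paste the function into a shell opened with `kubectl exec -it <pod> -- bash` and run `check_fuse_prereqs`; no output and exit status 0 mean the prerequisites are in place.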

Since no Kubernetes volume is involved, the mount and unmount are done with lifecycle hooks: postStart mounts the bucket with gcsfuse, and preStop unmounts it.

```yaml
lifecycle:
  postStart:
    exec:
      command: ['sh', '-c', 'gcsfuse my-bucket /path/to/mount/point']
  preStop:
    exec:
      command: ['sh', '-c', 'fusermount -u /path/to/mount/point']
```
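Putting the securityContext and lifecycle fragments above together, a complete Deployment might look like the sketch below, printed by a bash function. The function name, the Deployment name `gcs-app`, the three replicas and the default mount path `/mnt/bucket` are hypothetical; the image is assumed to contain gcsfuse, and the pod is assumed to run with Google credentials that can access the bucket. The added `mkdir -p` creates the mount point if the image does not already have it.

```shell
#!/usr/bin/env bash
# Sketch: print a Deployment whose pods each mount the same bucket.
# Arguments: image, bucket name, optional mount directory.
gcsfuse_deployment_manifest() {
  local image="$1" bucket="$2" mount_dir="${3:-/mnt/bucket}"
  # Unquoted EOF so ${image}, ${bucket} and ${mount_dir} are substituted.
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gcs-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: gcs-app
  template:
    metadata:
      labels:
        app: gcs-app
    spec:
      containers:
        - name: app
          image: ${image}
          securityContext:
            privileged: true
            capabilities:
              add:
                - SYS_ADMIN
          lifecycle:
            postStart:
              exec:
                command: ["sh", "-c", "mkdir -p ${mount_dir} && gcsfuse ${bucket} ${mount_dir}"]
            preStop:
              exec:
                command: ["sh", "-c", "fusermount -u ${mount_dir}"]
EOF
}
```

Usage: `gcsfuse_deployment_manifest gcr.io/my-project/app-with-gcsfuse:latest my-bucket /mnt/data | kubectl apply -f -` (image and bucket names hypothetical). Every replica mounts the same bucket at the same path, whichever node it lands on.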

For this to work, gcsfuse must be installed inside the container, which means the container image has to be built with gcsfuse included. Once that image is built, mounting the GCP bucket in your containers is straightforward.

In this blog, I explained how to mount a GCP bucket on containers, starting from persistent volumes and the problems they pose on GKE when pods on multiple nodes need read-write access. The goal was to give practical solutions to the problems you might run into while following the mounting procedure in different scenarios. Let me know your thoughts on this, or any other problems you are facing, in the comments section below. Till then, happy coding.