Complex Infrastructure Management with Terraform and Bash Script as Wrapper

Posted By

Shadja Chaudhari

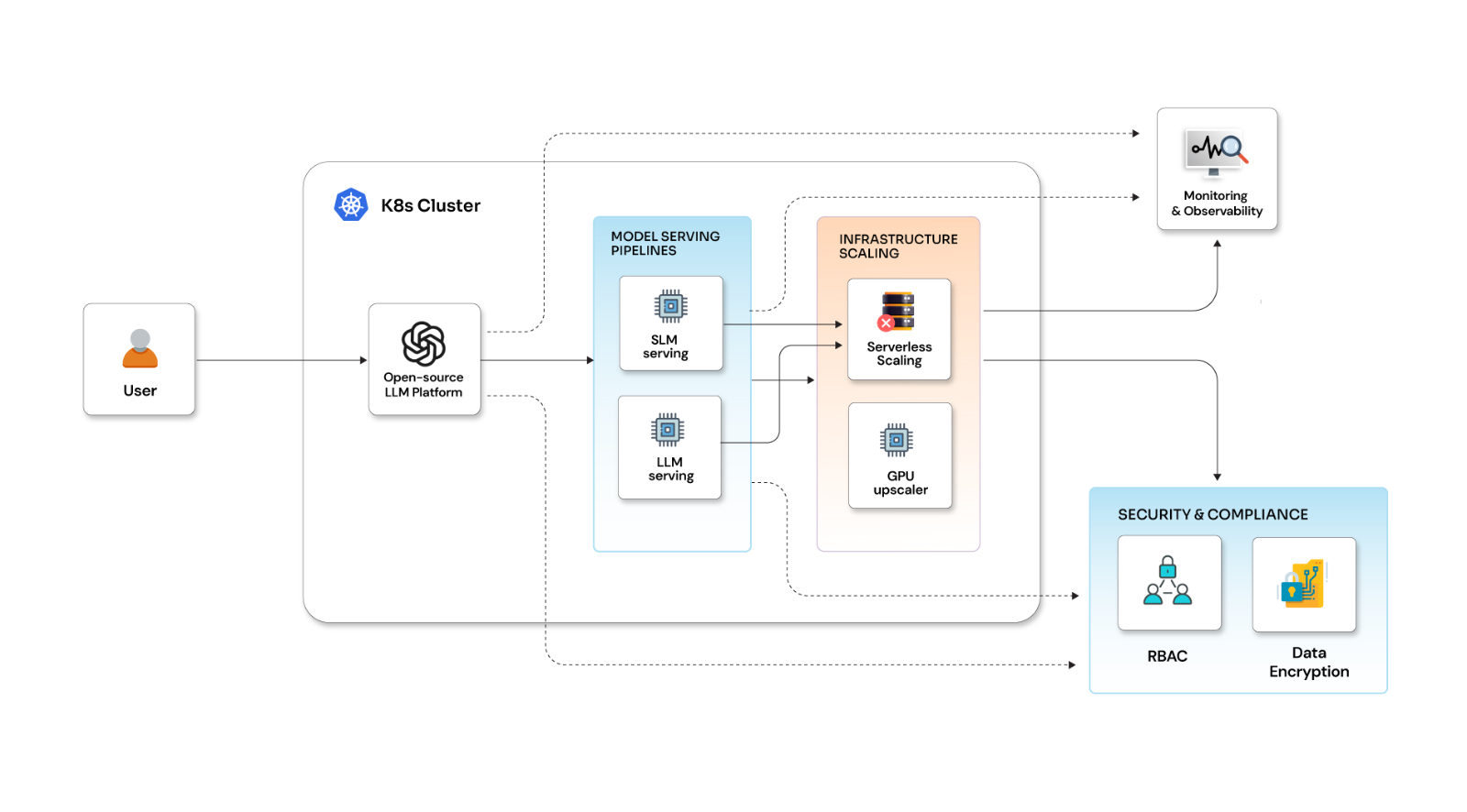

Writing infrastructure-as-code for an environment with many cloud resources of many different types is one of the most demanding jobs in infrastructure automation. For such complex environments, the code has to be readable and flexible so that the infrastructure can be understood quickly. Terraform is an infrastructure-as-code tool by HashiCorp that gives users a systematic way to define infrastructure configuration and apply it in a controlled way. This makes Terraform a go-to tool for infrastructure-as-code.

We at Opcito are working on a product that is hosted on the AWS cloud. In its initial stages, the product's architecture lagged a few steps behind a microservices-based architecture (no containers yet!). The result was an environment with 12-13 servers, some auto-scaling groups with and without a load balancer, close to 30 security groups designed around the type of traffic (so each EC2 server needed at least 5 security groups to get a unique set of rules that opened only minimal traffic), various IAM roles and profiles, and 3-4 databases. Most of us would agree that this is definitely not a perfect environment to start with!

The first thing that comes to mind is 'use Terraform modules'. Well, we tried using modules, but we couldn't meet all the acceptance criteria with them. Some of those criteria really set the boundaries for our work in Terraform. After all, it's always about what the client wants and how well we can align it with sane practices!

Observations/Acceptance Criteria:

- Terraform templates are to be reused (Do I really need to mention this? :P).

- Terraform state files are to be maintained separately for each resource.

- Terraform commands can be applied to each resource on its own without affecting any other resource; e.g., terminating an EC2 server that hosts service A should not affect the EC2 server that hosts service B.

- S3 backend config will be different for different environments; e.g., bucket name for staging is "terraform-states-staging" and for prod "terraform-states-production".

- Independent resources will be created first, e.g., ALB, security groups, IAM roles, etc., and these will be used by dependent resources, e.g., ALB will be used by auto-scaling groups, security groups will be used by EC2, ALB, auto-scaling groups.

- Independent and dependent resources will never be created in a single Terraform template. For example, the following template won't be accepted:

      resource "aws_autoscaling_group" "asgsg" {}
      resource "aws_security_group" "sg" {}

  Security groups are shared between EC2 and auto-scaling, so they can't be declared in either the auto-scaling or the EC2 template.

- Easy code maintenance (Obviously!).

- Provision to create one single resource if required.

- Bash script as a wrapper for Terraform commands.

So, before getting started with the Terraform structure, there are certain questions that we need to answer. The following questions are essential; the answers to them help in arriving at the right Terraform structure:

- How many non-identical resources of the same resource-type per environment?

ans: In our case - 4 EC2s, 4 Autoscaling Groups, 2 Relational Database Services (RDS), and 1 Redshift Cluster.

cue: The number 4, in the case of EC2, indicates there will be 4 sets of variable values. In other words, there will be 4 different *.tfvars files, one of which at a time can be passed as the "-var-file" argument to the 'terraform apply' command.

Look for sample files service_A.tfvars and service_B.tfvars.

- How many types of resources per environment?

ans: EC2, Autoscaling, ALB, RDS, security groups, Redshift, IAM roles.

cue: Suggests the number of Terraform templates to be created.

- What are the dependent and independent components of the environment?

ans: Security groups and IAM roles are needed by all other resources.

cue: These need state files that are separate from those of the dependent resources (the independent ones can be clubbed together in a single state), because they are created first and destroyed last. They will be global variables and hence go in the global.tfvars file. (They may appear as data sources in the Terraform templates.)

- How many environments?

ans: 3 viz., staging, poc, and prod.

cue: Represents the number of S3 buckets required for maintaining state files. All parameters whose values differ between environments will be of type map, with the environment names forming the keys of the maps (as prefixes, e.g., staging.instance_type). Look for the variable "attributes" in service_A.tfvars or service_B.tfvars. In short, service_A.tfvars and service_B.tfvars have the same keys in their maps but different values, because both are going to use the same Terraform template.

One of the possible Terraform structures would be:

    |---EC2
    |   |---main.tf
    |   |---variables.tf
    |   |---output.tf
    |---autoscaling_group
    |   |---main.tf
    |   |---variables.tf
    |   |---output.tf
    |---alb
    |   |---main.tf
    |   |---variables.tf
    |   |---output.tf
    |---security_groups
    |   |---main.tf
    |   |---variables.tf
    |   |---output.tf
    |---iam_roles
    |   |---main.tf
    |   |---variables.tf
    |   |---output.tf
    |---global.tfvars      (for security groups, alb, iam_roles and other common variables)
    |---service_A.tfvars
    |---service_B.tfvars
    |---run_terraform.sh

Code snippets:

EC2/main.tf

    terraform {
      required_version = "> 0.9.0"

      backend "s3" {
        # environment-specific bucket to store state files
      }
    }

    provider "aws" {
      # provide access key, secret key and region
    }

    resource "aws_instance" "ec2" {
      instance_type = "${var.attributes["${var.environment}.instance_type"]}"
      subnet_id     = "${var.attributes["${var.environment}.subnet_id"]}"
    }

Note: For simplicity, only two arguments instance_type and subnet_id are shown in the example.
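Because the backend block in the template is left empty, the bucket and the state key have to be supplied when 'terraform init' runs. Below is a minimal sketch of how a wrapper might assemble those arguments. The function names, the `<component>/terraform.tfstate` key layout, the default region, and the poc bucket name are assumptions made for illustration; only the staging and production bucket names come from the acceptance criteria above.

```shell
#!/bin/sh
# Sketch only: builds the `terraform init` command for one component in one
# environment. It prints the command instead of running it.

# Map an environment name to the S3 bucket that holds its state files.
state_bucket() {
  case "$1" in
    staging)         echo "terraform-states-staging" ;;
    prod|production) echo "terraform-states-production" ;;
    poc)             echo "terraform-states-poc" ;;  # assumed name, not given in the text
    *) echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# Print the init command with partial backend configuration.
# $1 = environment, $2 = component (e.g. service_A), $3 = region (optional)
backend_init_cmd() {
  bucket=$(state_bucket "$1") || return 1
  # One state object per component keeps every resource's state separate
  # (assumed key layout).
  key="$2/terraform.tfstate"
  region="${3:-us-east-1}"  # assumed default region
  echo "terraform init -backend-config=bucket=$bucket -backend-config=key=$key -backend-config=region=$region"
}

backend_init_cmd staging service_A
```

Keeping the key derived from the component name is one simple way to satisfy the "separate state file per resource" criterion without editing the template itself.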

EC2/variables.tf

    variable "attributes" {
      type        = "map"
      description = "Key Value pair map of attributes required for creating EC2 instance"
    }

    variable "environment" {
      type        = "string"
      description = "environment for which EC2 instance is being created"
    }

global.tfvars

    # Instance Profile to be associated with instances
    iam_instance_profile = {
      poc        = "poc-instance-profile"
      staging    = "staging-instance-profile"
      production = "production-instance-profile"
    }

    # Public Key Name used while creating the instances
    key_name = "terraform"

    # Base AMI name prefix to identify latest AMI
    base_image_name_prefix = {
      poc        = "staging-baseimage"
      staging    = "staging-baseimage"
      production = "production-baseimage"
    }

    alb = {
      poc.alb_name        = "poc-alb"
      poc.subnets         = "subnet-100000,subnet-200000,subnet-30000"
      staging.alb_name    = "stag-alb"
      staging.subnets     = "subnet-10001,subnet-20001,subnet-3001"
      production.alb_name = "prod-alb"
      production.subnets  = "subnet-10002,subnet-20002,subnet-3002"
    }

    # Key-value pair maps of all security groups in all environments
    all_security_groups = {
      # For AWS EC2
      # For web apps
      poc.ec2_web_external_sg_name        = "va-o-ec2-1-web-xxx"
      staging.ec2_web_external_sg_name    = "va-s-ec2-1-web-xxx"
      production.ec2_web_external_sg_name = "va-p-ec2-1-web-xxx"

      poc.ec2_data_internal_sg_name        = "va-o-ec2-0-dat-xxx"
      staging.ec2_data_internal_sg_name    = "va-s-ec2-0-dat-xxx"
      production.ec2_data_internal_sg_name = "va-p-ec2-0-dat-xxx"
    }

service_A.tfvars

    attributes = {
      poc.instance_type        = "t2.large"
      poc.subnet_id            = "subnet-12345"
      production.instance_type = "x1.16xlarge"
      production.subnet_id     = "subnet-67890"
      staging.instance_type    = "t2.medium"
      staging.subnet_id        = "subnet-111213"
    }

service_B.tfvars

    attributes = {
      poc.instance_type        = "t2.small"
      poc.subnet_id            = "subnet-102030"
      production.instance_type = "x1.large"
      production.subnet_id     = "subnet-607080"
      staging.instance_type    = "t2.large"
      staging.subnet_id        = "subnet-111213"
    }
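Since both services feed the same template, each var file has to define the same keys for every environment, and a missing key only shows up when Terraform fails on the lookup. A wrapper could check for this before applying. The following is a rough sketch under the assumption that the var files keep the flat `environment.key = value` layout shown above; the helper names `tfvars_has_key` and `check_tfvars` are hypothetical.

```shell
#!/bin/sh
# Sketch only: checks that a var file defines every attribute the shared
# template reads for a given environment.

# Succeeds if the file has a line of the form "<environment>.<key> = ..."
# (whitespace around the key and "=" is optional).
# $1 = tfvars file, $2 = environment, $3 = key
tfvars_has_key() {
  grep -Eq "^[[:space:]]*$2\.$3[[:space:]]*=" "$1"
}

# Report each missing key on stderr; return non-zero if any key is missing.
# $1 = tfvars file, $2 = environment, remaining arguments = required keys
check_tfvars() {
  file="$1"
  environment="$2"
  shift 2
  missing=0
  for key in "$@"; do
    if ! tfvars_has_key "$file" "$environment" "$key"; then
      echo "missing $environment.$key in $file" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For the EC2 template shown earlier, a call such as `check_tfvars service_A.tfvars staging instance_type subnet_id` would cover the two arguments used in the example.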

And lastly, the wrapper bash script logic should be such that the following command creates the required resources for service_A in the staging environment.

    bash run_terraform.sh -e staging -c service_A -apply

This bash script should:

- Initialize S3 backend with partial configuration

- Choose 'service_A.tfvars' as the external var file and run 'terraform apply' from the proper directory, i.e., EC2 in this case for service_A. (A new service might need an auto-scaling group; 'terraform apply' should then be run from the 'autoscaling_group' folder.) We added this mapping of 'name_of_service/resource': 'terraform_target_directory' to the bash script:

      "service_A": "autoscaling",
      "security_groups": "security_groups",
      "iam_role": "iam_role",
      "service_B": "ec2",
      "service_C": "autoscaling",
      "alb": "alb"

- Take care of ‘terraform get’ and ‘terraform init’ as well.
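The responsibilities listed above can be sketched as a small dry-run wrapper. This is not the original run_terraform.sh: it only prints the Terraform commands it would run. The directory names follow the tree shown earlier, while the service-to-directory assignments, the `-plan` and `-destroy` actions, the state key layout, and the `terraform-states-<environment>` bucket pattern are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch only: a dry-run version of a run_terraform.sh-style wrapper.

# Map a service or shared resource to the directory holding its template.
target_dir() {
  case "$1" in
    service_A|service_B) echo "EC2" ;;                # illustrative assignment
    service_C)           echo "autoscaling_group" ;;  # illustrative assignment
    alb)                 echo "alb" ;;
    security_groups)     echo "security_groups" ;;
    iam_role)            echo "iam_roles" ;;
    *) echo "unknown component: $1" >&2; return 1 ;;
  esac
}

# Parse "-e <environment> -c <component> -<action>" and print the commands.
run_terraform() {
  environment=""; component=""; action=""
  while [ "$#" -gt 0 ]; do
    case "$1" in
      -e|-c)
        [ "$#" -ge 2 ] || { echo "missing value for $1" >&2; return 1; }
        if [ "$1" = "-e" ]; then environment="$2"; else component="$2"; fi
        shift 2 ;;
      -apply|-plan|-destroy)
        action="${1#-}"  # strip the leading dash
        shift ;;
      *) echo "unknown argument: $1" >&2; return 1 ;;
    esac
  done
  if [ -z "$environment" ] || [ -z "$component" ] || [ -z "$action" ]; then
    echo "usage: run_terraform -e <env> -c <component> -apply|-plan|-destroy" >&2
    return 1
  fi
  dir=$(target_dir "$component") || return 1

  echo "cd $dir"
  # Partial backend configuration: bucket per environment, key per component.
  echo "terraform init -backend-config=bucket=terraform-states-$environment -backend-config=key=$component/terraform.tfstate"
  echo "terraform get"
  # Shared resources read only global.tfvars; services add their own var file.
  var_files="-var-file=../global.tfvars"
  case "$component" in
    service_*) var_files="$var_files -var-file=../$component.tfvars" ;;
  esac
  echo "terraform $action $var_files -var environment=$environment"
}

run_terraform -e staging -c service_A -apply
```

Printing the commands first makes it easy to review what a given invocation would touch before letting the wrapper execute anything.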

The following set of commands will create a staging environment with the infrastructure for service_A and service_B:

    bash run_terraform.sh -e staging -c security_groups -apply
    bash run_terraform.sh -e staging -c iam_role -apply
    bash run_terraform.sh -e staging -c service_A -apply
    bash run_terraform.sh -e staging -c service_B -apply
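The order matters: independent resources are created first and, as noted earlier, destroyed last. A small helper can generate the invocations in the right order for both directions. This is a sketch only; the `-destroy` flag on the wrapper and the helper names are assumptions, since the text only shows `-apply`.

```shell
#!/bin/sh
# Sketch only: prints the wrapper invocations needed to build or tear down an
# environment, keeping shared resources first on create and last on destroy.

# Creation order: independent resources first, then the services using them.
CREATE_ORDER="security_groups iam_role service_A service_B"

# Print the given words in reverse order, separated by single spaces.
reverse_words() {
  reversed=""
  for word in "$@"; do
    reversed="$word${reversed:+ $reversed}"
  done
  echo "$reversed"
}

# $1 = environment, $2 = action (apply or destroy)
environment_plan() {
  case "$2" in
    apply)   order="$CREATE_ORDER" ;;
    # Word splitting of $CREATE_ORDER is intentional here.
    destroy) order=$(reverse_words $CREATE_ORDER) ;;
    *) echo "unsupported action: $2" >&2; return 1 ;;
  esac
  for component in $order; do
    echo "bash run_terraform.sh -e $1 -c $component -$2"
  done
}

environment_plan staging destroy
```

With the list above, the destroy plan starts with service_B and ends with security_groups, mirroring the create sequence.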

To sum up, this solution does not use a strictly modular structure. Instead, we leveraged interpolation and maps to control the input arguments to the Terraform templates. And yes, a powerful bash script as a wrapper did make life easy. We could have used Terraform modules for the above use case, but only with the burden of multiple folders and of mentioning loads of variables twice: in the 'module' block that sets the source and in the root module. To conclude, I would say, 'Terraform is as simple as it looks and as complicated as you make it!'
