6 OpenStack Deployment tools that are awesome for your project

Today, working in software production often comes down to continuous deployments and operating an environment distributed all over the world, especially with on-demand cloud services in the picture. With mighty industry backers like Red Hat and Rackspace, OpenStack cloud technology is definitely here to stay. However, with the myriad of options available for deployment, how do you go about choosing a tool for your project? Major considerations include the tool's model (agent-based architecture or not?) and the structure of your environment (different tools depend on different languages and require specific OSes for support and setup).

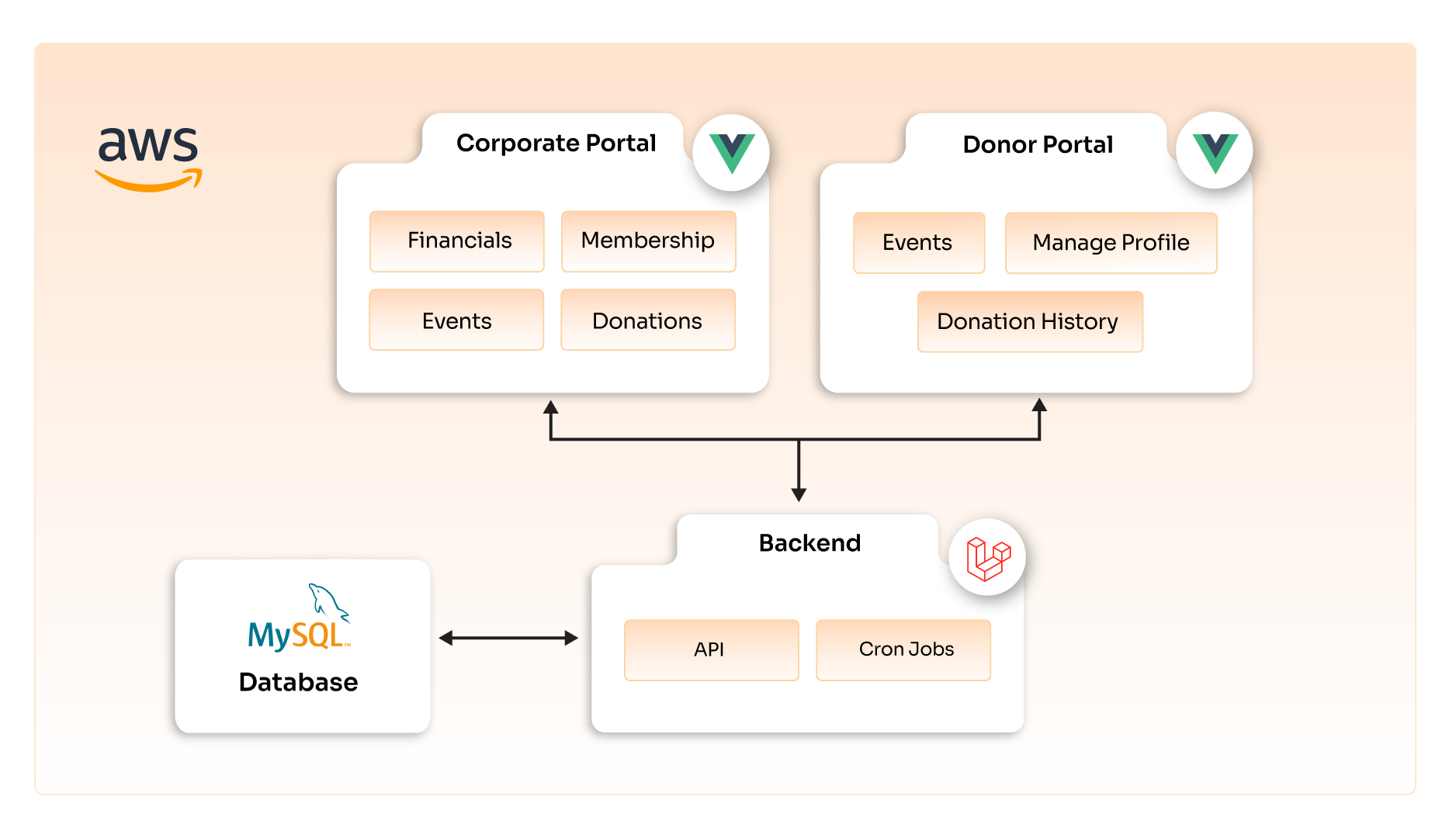

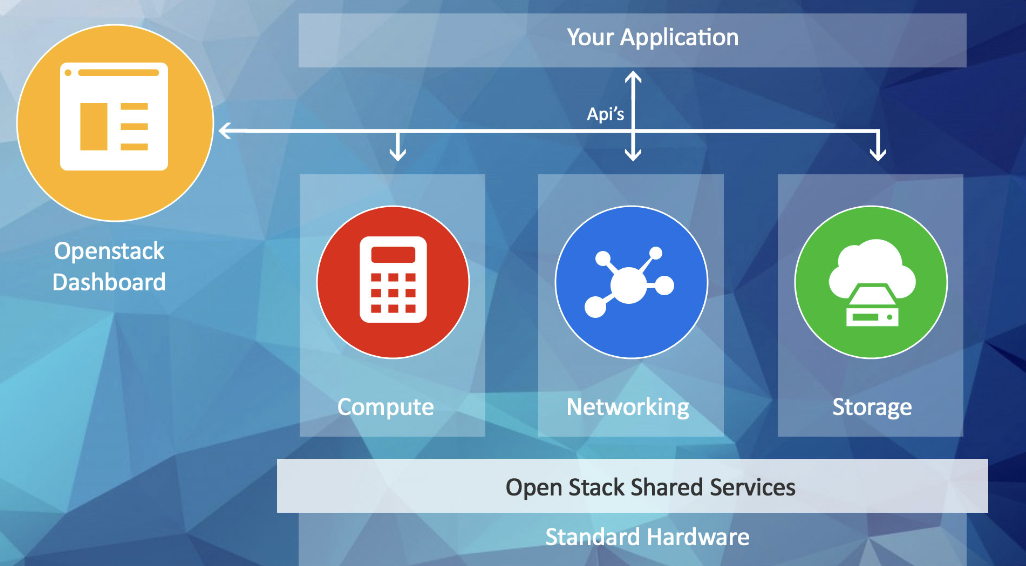

Let’s take a quick sneak peek at the cloud options OpenStack offers before we go any further.

OpenStack is an open source platform that lets you build an Infrastructure as a Service (IaaS) cloud that runs on commodity hardware. The technology behind OpenStack consists of a series of interrelated projects delivering various components for a cloud infrastructure solution.

Having said that, I think the most crucial point is that OpenStack is more than just a product; it is a diverse ecosystem with strong foundations behind it. As such, it should not be measured as run-of-the-mill product software. What does this mean, and why does it make a difference to the end user? When there is a common baseline of infrastructure, it's much easier for an entire industry to plug into it and support it individually. This applies to all layers of the stack, from storage and networking through higher-level services such as big data services and even analytics.

One of the advantages of OpenStack is that it is designed for horizontal scalability, so you can easily add new Compute, Network, and Storage resources to grow your cloud over time.

A critical part of a cloud's scalability is the amount of effort that it takes to run your cloud. To minimize the operational cost of running your cloud, set up and use an automated deployment and configuration infrastructure with a configuration management system.

An automated deployment system installs and configures operating systems on new servers, without intervention, after the absolute minimum amount of manual work, including physical racking, MAC-to-IP assignment, and power configuration.
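The MAC-to-IP assignment mentioned above is one of the manual steps that is easy to script. Here is a minimal sketch in Python, assuming a hypothetical management subnet (`10.0.0.0/24`) and made-up MAC addresses; the function name `assign_ips` and its parameters are illustrative and not part of any OpenStack tool.

```python
import ipaddress


def assign_ips(macs, subnet="10.0.0.0/24", first_host=10):
    """Map each MAC address to a static IP drawn in order from the subnet.

    first_host is the 1-based position of the first usable host address
    to hand out (hypothetical default: skip the first nine addresses).
    """
    hosts = list(ipaddress.ip_network(subnet).hosts())
    pool = hosts[first_host - 1:]
    if len(macs) > len(pool):
        raise ValueError("not enough free addresses in the subnet")
    # Normalize MACs to lower case so lookups are consistent.
    return {mac.lower(): str(ip) for mac, ip in zip(macs, pool)}


if __name__ == "__main__":
    # Hypothetical MAC addresses of two newly racked servers.
    table = assign_ips(["AA:BB:CC:00:00:01", "AA:BB:CC:00:00:02"])
    for mac, ip in table.items():
        print(mac, "->", ip)
```

A table like this would typically be fed to whatever DHCP or provisioning service your deployment system uses.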

Now that we’ve seen what OpenStack cloud entails, it is time to consider the next question: What exactly is your requirement with respect to deployment models and tool considerations? The three most popular deployment models are on-premise distribution, hosted private cloud, and OpenStack as a service. Currently, the hosted private cloud model is gaining favor among enterprises as the control over the actual infrastructure provides a much-needed competitive edge while also providing flexibility over the size of the cloud.

After deciding on a deployment model, we move forward to choosing a deployment tool as dictated by the product requirements.

Below are some popular tools to deploy an OpenStack distribution:

Chef:

Chef is an automation framework that makes it easy to deploy servers and applications to any physical, virtual, or cloud location, no matter the size of the infrastructure. It is a product focused on its developer user base.

Each organization comprises one or more workstations, a single server, and every node that will be configured and maintained by the chef-client. Cookbooks (and recipes) are used to tell the chef-client how each node in your organization should be configured. Furthermore, since Chef is centered around Git, it provides excellent version control capabilities.

There are a number of configuration options available, including block storage, hypervisors, databases, message queuing, networking, object storage, source builds, and so on. The currently supported deployment scenarios include All-in-One Compute, Single Controller + N Compute, and Vagrant.

Chef has an agent-based architecture that requires a client on each VM or server instance, all controlled by a central server. Chef also enjoys extensive support in the form of an abundance of cookbooks and documentation.

Chef uses Ruby as its reference language, so Ruby is a prerequisite to using Chef. The learning curve may be steep if you are not familiar with Ruby and procedural languages. One of the major cons of this system is that it does not support push functionality: each node's client pulls its configuration from the server. This IT automation and configuration tool is well suited to development-centric infrastructure projects.
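Since there is no push, each node's client fetches its desired configuration and applies only what differs from its current state. The following is a rough illustration of that pull-and-converge idea, written in Python rather than Chef's Ruby; the function names, the package names, and the dictionary standing in for the central server are all hypothetical and are not Chef APIs.

```python
def plan_actions(current, desired):
    """Return the install actions needed to move `current` to `desired`."""
    actions = []
    for pkg, version in sorted(desired.items()):
        if current.get(pkg) != version:
            actions.append(("install", pkg, version))
    return actions


def pull_and_converge(server_configs, node_name, current):
    """Simulate one client run: pull the desired state, apply the difference."""
    desired = server_configs.get(node_name, {})
    actions = plan_actions(current, desired)
    for _, pkg, version in actions:
        current[pkg] = version  # stand-in for actually installing the package
    return actions


if __name__ == "__main__":
    # Hypothetical desired state held by the central server.
    server = {"web01": {"nginx": "1.24", "ntp": "4.2"}}
    node_state = {"ntp": "4.2"}
    print(pull_and_converge(server, "web01", node_state))  # first run changes something
    print(pull_and_converge(server, "web01", node_state))  # second run has nothing to do
```

Running the client twice shows the idempotence that configuration management tools aim for: once the node matches the desired state, a further run makes no changes.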

OpenStack Chef Repository http://github.com/opscode/openstack-chef-repo

Ansible OpenStack:

Ansible is another popular infrastructure automation tool. It can configure systems, deploy software, and orchestrate more advanced IT tasks such as continuous deployments or zero downtime rolling updates.

Ansible’s main goals are simplicity and ease of use. It also has a strong focus on security and reliability, featuring a minimum of moving parts, usage of OpenSSH for transport (with an accelerated socket mode and pull modes as alternatives), and a language that is designed around auditability by humans, even those not familiar with the program.

OpenStack-Ansible is an official OpenStack project which aims to deploy production environments from source in a way that makes it scalable while also being simple to operate, upgrade, and grow.

Another major advantage of using Ansible is its agent-less architecture. Because of this, there is no need to configure the VM or workstation instances beforehand; Ansible can work with them directly from the command line. Furthermore, custom modules can be written in any language as long as their output is in JSON format.
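To make the JSON requirement concrete, here is a minimal sketch of what the output side of a custom module could look like in Python. The function `build_result` and the package name are hypothetical; `changed` and `msg` are conventional keys in module results. A real module would also read its arguments from Ansible and check the system's actual state, which this sketch leaves out.

```python
import json


def build_result(name, already_present):
    """Build the result dictionary a module would report back to Ansible."""
    changed = not already_present
    status = "installed" if changed else "already present"
    return {"changed": changed, "msg": f"{name} {status}"}


if __name__ == "__main__":
    # A module communicates its result by printing a JSON document to stdout.
    print(json.dumps(build_result("ntp", already_present=False)))
```

The same contract could be met by a shell script or a Go binary, which is what makes the module language a free choice.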

However, Ansible also has drawbacks. The support that playbooks offer is limited, and Ansible is not equally well supported on all OSes.

Ansible, with its easy deployment and uncomplicated playbooks, appeals to system administrators for managing system and cloud infrastructure.

Puppet and OpenStack:

Puppet Enterprise helps you get started with OpenStack on Day One, and helps you scale your cloud as your organization's needs increase. It's the most complete in terms of available actions, modules, and user interfaces. Puppet is more similar to Chef than it is to Ansible in quite a few aspects. Puppet also follows an agent-based architecture and is more focused on development-centric projects.

All modules and configurations are built with a Puppet-specific language based on Ruby, or Ruby itself, and thus will require programmatic expertise in addition to system administration skills.

With the OpenStack Puppet Modules project maintained by the OpenStack community, you can use Puppet Enterprise to deploy and configure OpenStack components themselves, such as Nova, Keystone, Glance, and Swift.

Puppet Enterprise allows for real-time control of managed nodes using prebuilt modules and manifests present on the master servers. The reporting tools are well developed, providing deep details on how agents are behaving and what changes have been made.

SaltStack OpenStack:

Salt, a new approach to infrastructure management, is easy enough to get running in minutes, scalable enough to manage tens of thousands of servers, and fast enough to communicate with those servers in seconds. It can be installed through Git or through the package management system on masters and clients. Each client, called a minion, makes a request to a master server; once the master accepts the request, that minion can be controlled.

The Salt system is amazingly simple and easy to configure; the two components of the Salt system each have a respective configuration file. The salt-master is configured via the master configuration file, and the salt-minion is configured via the minion configuration file.

As with Ansible, you can issue commands to minions directly from the CLI, such as to start services or install packages, or you can use YAML configuration files, called "states," to handle more complex tasks. There are also "pillars," which are centrally located sets of data that states can access while running.

You can request configuration information -- such as kernel version or network interface details -- from minions directly from the CLI. Minions can be delineated through the use of inventory elements, called "grains," which makes it easy to issue commands to a particular type of server without relying on configured groups. For instance, in a single CLI command, you could target every minion that is running a particular kernel version.
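The idea behind grain-based targeting can be shown with a small Python simulation. This is not Salt's API: the inventory below, the minion names, and the `match_grain` helper are hypothetical and only mimic how a master selects minions by a grain value such as the kernel release.

```python
def match_grain(minions, grain, value):
    """Return the sorted IDs of minions whose grain equals the given value."""
    return sorted(
        minion_id
        for minion_id, grains in minions.items()
        if grains.get(grain) == value
    )


# Hypothetical inventory: minion ID -> grains reported by that minion.
INVENTORY = {
    "web01": {"os": "Ubuntu", "kernelrelease": "5.15.0-91-generic"},
    "web02": {"os": "Ubuntu", "kernelrelease": "5.4.0-169-generic"},
    "db01": {"os": "CentOS", "kernelrelease": "5.15.0-91-generic"},
}

if __name__ == "__main__":
    # Select every minion running one particular kernel release.
    print(match_grain(INVENTORY, "kernelrelease", "5.15.0-91-generic"))
```

Because the selection is computed from what each minion reports about itself, no group membership has to be maintained by hand.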

Custom modules can be written in Python or PyDSL. Salt does offer Windows management as well as Unix, but is more at home with Unix and Linux systems.

Salt's biggest advantage is its scalability and resiliency. You can have multiple levels of masters, resulting in a tiered arrangement that both distributes load and increases redundancy. Upstream masters can control downstream masters and their minions. Another benefit is the peering system that allows minions to ask questions of masters, which can then derive answers from other servers to complete the picture. This can be handy if data needs to be looked up in a real-time database in order to complete a configuration of a minion.
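The tiered arrangement can be pictured as a tree in which a command issued at the top fans out through downstream masters to their minions. Below is a minimal Python sketch of that fan-out; the topology, the names, and the `collect_minions` function are hypothetical and do not correspond to Salt's own configuration format.

```python
def collect_minions(topology, master):
    """Return every minion reachable from `master`, directly or via downstream masters."""
    node = topology[master]
    reached = list(node.get("minions", []))
    for downstream in node.get("masters", []):
        reached.extend(collect_minions(topology, downstream))
    return reached


# Hypothetical two-tier layout: one upstream master, two downstream masters.
TOPOLOGY = {
    "top": {"masters": ["east", "west"], "minions": []},
    "east": {"masters": [], "minions": ["east-web01", "east-db01"]},
    "west": {"masters": [], "minions": ["west-web01"]},
}

if __name__ == "__main__":
    print(collect_minions(TOPOLOGY, "top"))   # all three minions
    print(collect_minions(TOPOLOGY, "west"))  # only the west minion
```

Each downstream master only handles its own minions, which is how the load gets distributed across tiers.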

OpenStack Autopilot:

OpenStack Autopilot is one of the easiest ways to build and manage an OpenStack private cloud. This Canonical OpenStack installer boasts a choice of storage and SDN technologies, a high-performance cloud architecture, high scaling capabilities, and support for major vendor servers. Autopilot works in a very simple manner: you use MAAS to register your machines, select your OpenStack configuration, and add your hardware.

Metal as a Service – MAAS – lets you treat physical servers like virtual machines in the cloud. Rather than having to manage each server individually, MAAS turns your bare metal into an elastic cloud-like resource.

Fuel OpenStack:

Fuel is an open source deployment and management tool for OpenStack. Developed as an OpenStack community effort, it provides an intuitive, GUI-driven experience for deployment and management of OpenStack, related community projects and plug-ins.

Fuel comes with several pre-built deployment configurations that you can use to immediately build your own OpenStack cloud infrastructure.

You can create the following cluster types:

1. Single node: install an entire OpenStack cluster on a single physical or virtual machine.

2. Multi-node (non-HA): add OpenStack services such as Cinder, Quantum, and Swift without the extra hardware that high availability requires.

3. Multi-node (HA): create an OpenStack cluster that provides high availability, with three controller nodes and the ability to individually specify services such as Cinder, Quantum, and Swift.
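The choice between these three cluster types can be expressed as a simple decision rule. The following Python sketch is only an illustration of that rule, not Fuel's interface: the function `pick_cluster_type` and the returned labels are hypothetical, and the only constraint taken from the list above is that HA uses three controller nodes.

```python
HA_CONTROLLERS = 3  # the HA layout described above uses three controller nodes


def pick_cluster_type(total_nodes, need_ha):
    """Pick one of the three cluster types from node count and HA requirement."""
    if total_nodes < 1:
        raise ValueError("at least one node is required")
    if need_ha:
        if total_nodes < HA_CONTROLLERS:
            raise ValueError("HA needs at least three nodes for the controllers")
        return "multi-node-ha"
    if total_nodes == 1:
        return "single-node"
    return "multi-node"


if __name__ == "__main__":
    for nodes, ha in [(1, False), (4, False), (6, True)]:
        print(nodes, ha, "->", pick_cluster_type(nodes, ha))
```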

Conclusion

While Puppet and Chef will appeal to developers and development-oriented shops, Salt and Ansible are much more attuned to the needs of system administrators. Ansible's simple interface and usability fit right into the sys admin mindset, and in a shop with lots of Linux and Unix systems, Ansible is quick and easy to run right out of the gate.

Salt is the sleekest and most robust of the four, and like Ansible, it will resonate with sys admins. Highly scalable and quite capable, Salt is held back only by its web UI.

Puppet is the most mature and probably the most approachable of the four from a usability standpoint, though a solid knowledge of Ruby is highly recommended. Puppet is not as streamlined as Ansible or Salt, and its configuration can get Byzantine at times. Puppet is the safest bet for heterogeneous environments, but you may find Ansible or Salt to be a better fit in a larger or more homogeneous infrastructure.

Chef has a stable and well-designed layout, and while it's not quite up to the level of Puppet in terms of raw features, it's a very capable solution. Chef may pose the most difficult learning curve to administrators who lack significant programming experience, but it could be the most natural fit for development-minded admins and development shops.

Fuel and Autopilot are relatively new tools that, though built exclusively with OpenStack cloud structure in mind, are not as mature as the other tools mentioned above.